Querying and Data Transformations

Table of contents

- Querying With Flux

- Flux Data Transformations

- Grouping

- Windowing

- Windowing and aggregateWindow()

- Real World Data Example of Grouping

- Aggregations

- Yielding

- Pivoting

- Mapping

- Returning values and arrays

- Reducing

- Manipulating Time

- Regex

- The String Package

- Combining Data Streams

- Accessing External Data Sources

- Materialized Views or Downsampling Tasks

- Further Reading

Querying With Flux

In the vernacular of Flux, a Flux script is called a “query.” This is despite the fact that you can write valid and useful Flux that doesn’t even query your own data at all. For our purposes now, we can think of writing a query as retrieving targeted data from the storage engine, as opposed to transforming and shaping the data, which will be discussed in detail in the following sections.

As described in the section “Just Enough Flux” in the previous chapter, you can see that a typical simple query involves 3 parts:

- The source bucket

- The range of time

- A set of filters

Additionally, a query may contain a yield() statement depending on circumstances.

from()

In most cases, a query starts by specifying a bucket to query from using the bucket name:

from(bucket: "bucket1")

In some cases, you may wish to use the bucket’s id instead:

from(bucketID: "497b48e409406cc7")

Typically, developers will address a bucket by its name for a few reasons. First, of course the bucket name is much more readable, the role of the bucket can be encoded in the name. Additionally, there may be times when deleting a bucket and creating a new one with the same name is the most expedient way to delete data. Addressing the bucket by its id has the advantage of being immutable. Someone can change the bucket name, and the query using the id will continue working.

There are cases that will be described below where you use a different kind of “from”, for example sql.from() or csv.from() or array.from() to bring in data from other sources.

range()

The range function is required directly after from()and its purpose is to specify the points to include based on their timestamps. range() has only two parameters.

start and stop

An argument for start is required, whereas stop is optional. In the case where you leave out an argument for stop, Flux will substitute now(), which is the current time when execution is scheduled.

now()

now() always returns the time when a Flux script is scheduled to start execution. This has some important implications:

- If your script is not run as part of a task, now() will return the time at the very start of execution of the script. If there are any delays, for example due to queuing as a result of excessive load, etc… now() will the time when the script was scheduled to run.

- If your script is running as part of a task, now() will return the time that your script was scheduled to run.

- Every call to now() in the script will return the same time.

Calling range() with Relative Durations

Possibly the most common way to use the range function is to use a start time like this:

range(start: -5m)

This says to provide all of the data that is available starting five minutes ago. This is inclusive, meaning that any data that is timestamped exactly with the nanosecond exactly five minutes ago will be included. Any data that is five minutes and one nanosecond older or more will not be included.

Conversely, stop is exclusive. That is to say that if you have any data that is timestamped exactly with the stop argument, it will NOT be included with the results.

So, for example, if there is data that is timestamped precisely 1 minute ago, and you have the following queries, that data will be included in the second query, but not the first.

from(bucket: "bucket1")

|> range(start: -2m, stop: -1m)

from(bucket: "bucket1")

|> range(start: -1m)

When a stop argument is not supplied Flux simply substitutes now(). So the following queries are equivalent:

from(bucket: "bucket1")

|> range(start: -1m, stop: now())

from(bucket: "bucket1")

|> range(start: -1m)

However, this is not true when the start time is in the future. This can happen if your timestamps are, for some reason, post-dated. If your start time is in the future, than now() is, logically before the start time, so this will cause an error:

from(bucket: "bucket1")

|> range(start: 1m)

Simply support a stop duration that is later than the start to ensure that it works.

from(bucket: "bucket1")

|> range(start: 1m, stop: 2m)

A duration is a type in Flux. So it is unquoted, and consists of a signed integer and unit. The following duration units are supported:

1ns // 1 nanosecond

1us // 1 microsecond

1ms // 1 millisecond

1s // 1 second

1m // 1 minute

1h // 1 hour

1d // 1 day

1w // 1 week

1mo // 1 calendar month

1y // 1 calendar year

So, for example, to select a week’s worth of data starting two weeks in the past, you can use relative durations like this:

|> range(start: -2w, stop: -1w)

Durations represent a span of time, not a specific time. Therefore, Flux does not understand things like:

|> range(start: now() - 5m)

That will result in an error because now() returns a specific time, whereas 5m represents a span of time. The types are not compatible. It is possible to do calculations based on times and durations, and this will be covered in detail in a later section.

Durations are not addable, either, so the following will throw an error:

|> range(start: -5m + -2m)

Defining Ranges with Integers

The start and stop parameters also accept integers. For example, you have already seen:

|> range(start: 0)

The integer represents the nanoseconds that have transpired since Thursday, January 1, 1970 12:00:00 AM, GMT, also known as “Unix Time.”

This is extremely useful, as many systems with which you may want to integrate natively use Unix Time. For example, Fri Jan 01 2021 06:00:00 GMT+0000 is represented as 1609480800000 in Unix time. However, in this case, notice that the time here is represented as milliseconds, not nanoseconds. To perform this conversion, simply multiply the milliseconds by 1,000,000, or you can define the precision when you write the data to the database.

So, for all of the data starting from Jan 1, 2021:

from(bucket: "bucket1")

|> range(start: 1609480800000000000)

Unlike durations, integers are, of course, addable, so, to go back a year, this would work:

|> range(start: -365 * 24 * 60 * 60 * 100000000)

As with durations, if you supply an integer in the future, you must supply a stop time that is later.

Defining Ranges with Times

The third type that is accepted by start and stop is a time. A time object is expressed as RFC3339 timestamps. For example the following all represent the start of Unix Time:

- 1970-01-01

- 1970-01-01T00:00:00Z

- 1970-01-01T00:00:00.000Z

So, to get data from the start of some day to now:

from(bucket: "bucket1")

|> range(start: 2021-07-27)

To get data for some day in the past:

from(bucket: "bucket1")

|> range(start: 2021-07-25, stop: 2021-07-26)

By adding the “T” you can get arbitrarily fine grained resolution as well. For example, to skip the first nanosecond:

from(bucket: "bucket1")

|> range(start: 2021-07-27T00:00:00.0000000001Z)

If you only care about seconds, you can leave off the fraction:

from(name: "bucket1")

|> range(start: 2021-07-27T00:00:01Z)

Calculating Start and Stop Times

It is possible to compute start and stop times for the range.

<something here about subtracting time and adding time>

Start and Stop Types Can Be Different

The start and stop parameters do not require the same type to be used. The following work fine.

from(bucket: "operating-results")

|> range(start: -3d, stop: 2021-07-26)

from(bucket: "operating-results")

|> range(start: 1627347600000000000, stop: -1h)

filter()

A filter function must either implicitly or explicitly return a boolean value. A filter function operates on each row of each table, and in cases where there return value is true, the row is retained in the table. In cases where the return value is false, the row is removed from the table.

Filter Basics

A very common filter is to filter by measurement.

filter(fn: (r) => r._measurement == "measurement1")

The actual function is the argument for the fn parameter:

(r) => r._measurement == "measurement1"

“(r)” is the parameter list. A filter function always expects to only have a single parameter, and for it to be called “r.” Then the function body is a simple boolean expression that will evaluate to true or false. This function will return true when the _measurement for a row is “sensors” and so therefore the function will emit a stream of tables where all of the data has the sensor measurement.

Naturally, you can omit the sensors measurement in the same manner:

filter(fn: (r) => r._measurement != "measurement1")

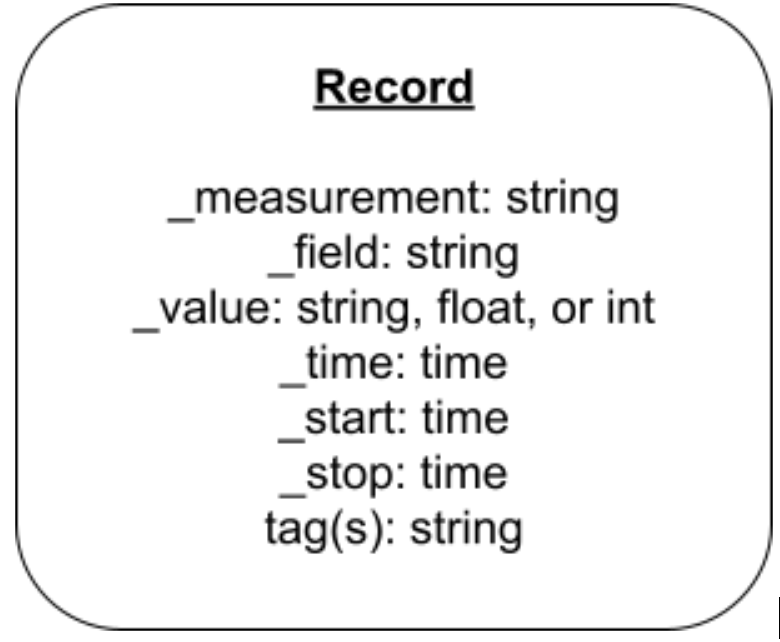

Anatomy of a Row

When read from the storage engine and passed into the filter function, by default, before being transformed by other functions, every row has the same essential object model. Flux uses a leading underscore (“_”) to delineate reserved member names. In Flux, each member of a row is called a “column,” or sometimes a “field” depending on the context.

We can see how this is generally represented as a row in Flux.

| _measurement | tag1 | _field | _value | _time |

| measurement1 | tagvalue1 | fieldname1 | 1.0 | rfc3339time1 |

r._measurementis a string that is the measurement which defines the table that row is saved into.r._fieldis a string that is the name of the field which defines the table that the row is saved into.r._valueis the actual value of the field.r._timeis the time stamp of the row.

Additionally, each tag value is accessible by its tag name. For example, r.tag1, which in this example has a value of “tagvalue1.”

Finally, there are two additional context specific members added. These members are determined by the query, not the underlying data:

r._startis the start time of the range() in the query.r._stop()is the stop time of the range() in the query.

For example, if you query with a range of 5 minutes in the past (range(start: -5m)), you will get a _start and _stop 5 minutes apart:

| _measurement | tag1 | _field | _value | _start | _stop | _time |

| measurement1 | tagvalue1 | fieldname1 | 1.0 | 2021:08:20T20:00:000000000Z | 2021:08:20T20:05:000000000Z | rfc3339time1 |

When you are filtering, you therefore have all of these columns to work from.

Filtering Measurements

A discussed in the data model section above, a measurement is the highest order aggregation of data inside a bucket. It is, therefore, the most common subject, and typically first, filter, as it filters out the most irrelevant data in a single statement.

Additionally, every table written by the storage engine has exactly one measurement, so the storage engine can quickly find the relevant tables and return them.

filter(fn: (r) => r._measurement == "measurement1")

Given the two tables below, only the first will be returned if the preceding filter is applied:

| _measurement | tag1 | _field | _value | _time |

| measurement1 | tagvalue1 | field1 | 1i | rfc3339time1 |

| measurement1 | tagvalue1 | field1 | 2i | rfc3339time2 |

| _measurement | tag1 | _field | _value | _time |

| measurement2 | tagvalue1 | field1 | 1.0 | rfc3339time1 |

| measurement2 | tagvalue1 | field1 | 2.0 | rfc3339time2 |

Filtering Tags

Multiple measurements can share the same tag set. As such, filtering by tag is sometimes secondary to filtering by measurement. The storage engine keeps track of where the tables with different tags for specific measurements are, so filtering by tag is typically reasonably fast.

The following tables have different measurements, but the same tag values, so the following filter will return both tables:

|> filter(fn: (r) => r.tag1 == "tagvalue1")

| _measurement | tag1 | _field | _value | _time |

| measurement1 | tagvalue1 | field1 | 1i | rfc3339time1 |

| measurement1 | tagvalue1 | field1 | 2i | rfc3339time2 |

| _measurement | tag1 | _field | _value | _time |

| measurement2 | tagvalue1 | field1 | 1.0 | rfc3339time1 |

| measurement2 | tagvalue1 | field1 | 2.0 | rfc3339time2 |

If you only want one measurement with that tag value, you can simply include both filters. The following will return only the first table:

|> filter(fn: (r) => r._measurement == "measurement1")

|> filter(fn: (r) => r.tag1 == "tagvalue1")

Filtering by Field

Filtering by field is extremely common, and also very fast, as fields are part of the group key of tables. Given the following table, if you are interested in records in field1 in measurement1, you can simply query like so, and get back only the first table:

|> filter(fn: (r) => r._field == "field1")

| _measurement | tag1 | _field | _value | _time |

| measurement1 | tagvalue1 | field1 | 1i | rfc3339time1 |

| measurement1 | tagvalue1 | field1 | 2i | rfc3339time2 |

| _measurement | tag1 | _field | _value | _time |

| measurement1 | tagvalue1 | field2 | 1.0 | rfc3339time1 |

| measurement1 | tagvalue1 | field2 | 2.0 | rfc3339time2 |

| _measurement | tag1 | _field | _value | _time |

| measurement2 | tagvalue1 | field2 | 3.0 | rfc3339time1 |

| measurement2 | tagvalue1 | field2 | 4.0 | rfc3339time2 |

However, this won’t work for field2, as that field name exists in measurement2 as well. Simply include a measurement filter as well:

|> filter(fn: (r) => r._measurement == "measurement1")

|> filter(fn: (r) => r._field == "field2")

This will return only the first table.

Filter by Exists

There may be circumstances where you wish to only operate on tables that contain a specific tag value. You can use exists or not exists for this.

The following will ensure that only tables which contain the “tag1” are returned:

|> filter(fn: (r) => exists r.tag1)

Similarly, if you want to retain only tables that do not conain the “tag1”, use:

|> filter(fn: (r) => not exists r.tag1)

To illustrate that point, take the following two tables. Each record has a different time stamp. The second table differs only in that those points were recorded with an additional tag, “tag2”.

| _measurement | tag1 | _field | _value | _time |

| measurement1 | tagvalue1 | field1 | 1i | rfc3339time3 |

| measurement1 | tagvalue1 | field1 | 2i | rfc3339time4 |

| _measurement | tag1 | tag2 | _field | _value | _time |

| measurement1 | tagvalue1 | tagvalue2 | field1 | 3i | rfc3339time1 |

| measurement1 | tagvalue1 | tagvalue2 | field1 | 4i | rfc3339time2 |

The following query will return the first table:

> filter(fn: (r) => not exists r.tag2) |

If you only wanted to return the second table with the points that lack the “tag2”, you can use not exists. Instead you must must drop that column all together. We’ll cover that in more detail in later sections.

Filtering by Field Value

Filtering by measurement(s), tag(s), or field(s) removes entire tables from the response. You can also filter out individual rows in tables. The most common way to do this is to filter by value.

For example, if we take our few rows of air sensor data, and first filter by field:

|> filter(fn: (r) => r._field == "fieldname1")

We are left with these two tables.

| _measurement | tag1 | _field | _value | _time |

| measurement1 | tagvalue1 | field1 | 1.0 | rfc3339time1 |

| measurement1 | tagvalue1 | field1 | 2.0 | rfc3339time2 |

| _measurement | tag1 | _field | _value | _time |

| measurement1 | tagvalue2 | field1 | 3.0 | rfc3339time1 |

| measurement1 | tagvalue2 | field1 | 3.0 | rfc3339time2 |

If we also add a filter for value, we can filter out individual rows. For example:

|> filter(fn: (r) => r._field == "field1")

|> filter(fn: (r) => r._value >= 2.0)

Will result in the following stream of tables being emitted:. Note that the first table has dropped a single row:

| _measurement | tag1 | _field | _value | _time |

| measurement1 | tagvalue1 | field1 | 2.0 | rfc3339time2 |

| _measurement | tag1 | _field | _value | _time |

| measurement1 | tagvalue2 | field1 | 3.0 | rfc3339time1 |

| measurement1 | tagvalue2 | field1 | 3.0 | rfc3339time2 |

The row where the field value was less than 2 was dropped.

Compound Filters

Boolean expressions in Flux can be compounded with “or” and “and.” For example, to retrieve all the tables with either the fields temperature or humidity, but no others, you use:

|> filter(fn: (r) => r._field == "field1" or r._field == "filed2")

You can aggregate with or using different members of r if needed:

|> filter(fn: (r) => r._field == "field1" or exist r.tag1)

You can use and as well:

|> filter(fn: (r) => r._field == "field1" and r.tag1 == "tagvalue1")

However, this is less commonly used because it is equivalent to simply supplying two filters. The follow two filters is equivalent to, and arguably easier to read and modify:

|> filter(fn: (r) => r._field == "field1")

|> filter(fn: (r) => r.sensor_id == "tagvalue1")

Regular Expressions

Sometimes your code will need to find substrings or even more complex pattern matching. Flux supports regular expressions for this purpose.

There are two regex operators in Flux, “=~” for matches, and “!~” for does not match. The operators expect a string on the left side, and regular expression on the right. You define a regular expression object by surrounding your regex in “/.” For example to find all values of tag1 that include the string “tag”. You can use:

|> filter(fn: (r) => r.tag1 =~ /tag/)

In this case, the regex operator is “matches”, i.e. find all of the tag1 values that match the regex, and the regex itself is tag. Every table where the tag1 tag value contains the string “tag” will be returned.

To exclude all such tables, simply use the “does not match” version:

|> filter(fn: (r) => r.tag1 !~ /tag/)

Flux supports the full range of regex expressiveness. To match the pattern of 3 capital letters and 4 digits:

|> filter(fn: (r) => r._value =~ /[A-Z]{3}[0-9]{4}/)

These operators work on any field that is of type string. So you can use this to filter by measurement, field name, and even field value when the field value is a string type.

However, it is important to note that the Flux storage engine cannot leverage the layout of tables when using regular expressions, so it must often scan every table, or even every row, to find matches. This can cause your queries to run much more slowly. Therefore, if you are regularly using regular expressions to filter your data, consider adding additional tags instead.

If, Then, Else

Flux also supports “if, then, else” statements. This can be useful if you want to express more complex conditions in a readable manner.

The following two filters are equivalent:

filter(fn: (r) => if r._value < 2.0 then true else false)

filter(fn: (r) => r._value < 1.0)

Naturally, you can rather return the result of a boolean expression:

filter(fn: (r) => if r.tag1 == "tagvalue1" then r._value < 2.0 else false)

If then else can also be chained:

filter(fn: (r) => if r.tag1 == "tagvalue1"

then r._value < 2.0

else if r.tag1 == "tagvalue2"

then r._value < 3.0

else false)

Types In Comparisons

As a strongly typed language, in general, Flux does not support comparisons between variables with different types.

Flux does support comparing integers to floats:

int( v: "1") == 1.0

But does not support comparing other data types. For example, this will cause an error:

"1" == 1.0

unsupported binary expression string == float

Times can be compared:

2021-07-12T19:38:00.000Z < 2021-07-12T19:39:00.000Z

But cannot be compared to, for example, Unix Time integers:

2021-07-12T19:38:00.000Z < 1627923626000000000

unsupported binary expression time < int

But this can be done with some explicit casting:

uint(v: 2021-07-12T19:38:00.000Z) < 1627923626000000000

Details on how to cast time between different formats so that you calculate and compare time and durations from different formats is covered in detail in a future section.

Queries and the Data Model

This section focused on queries that only found and filtered data. As such, the results are a subset of tables and a subset of rows of the tables stored in the storage engine. There may be few tables, but the only change to the tables returned is that there may be rows filtered out.

In other words, the pattern from() |> range() |> filter() will not transform your data as stored on disk other than perhaps filtering it. The next section will go further and delve into many of the options for transforming the shape of the data.

Flux Data Transformations

In addition to retrieving data from disk, Flux is a powerful data transformation tool. You can use Flux to shape your data however needed, as well as apply powerful mathematical transformations to your data as well.

Grouping

To review, when you write data to InfluxDB, the storage engine persists it in tables, where each table is defined by a “group key.” The group key used to persist the data is a measurement name, a unique set of tag values, and a field name.

Consider the following example of 12 tables with two rows each, all containing the same measurement, but there are:

- Two tags, with a total of 5 tag values

- tag1 has the 2 tag values:

- tagvalue1

- tagvalue4

- tag2 has 3 tag values:

- tagvalue2

- tagvalue3

- tagvalue5

- tag1 has the 2 tag values:

- Two fields

- field1

- field2

Where the line protocol would look like:

measurement,tag1=tagvalue1,tag2=tagvalue2 field1=0.0 unixtime1

measurement,tag1=tagvalue1,tag2=tagvalue2 field1=1.0 unixtime2

measurement,tag1=tagvalue4,tag2=tagvalue2 field1=0.0 unixtime1

measurement,tag1=tagvalue4,tag2=tagvalue2 field1=1.0 unixtime2

measurement,tag1=tagvalue1,tag2=tagvalue3 field1=0.0 unixtime1

measurement,tag1=tagvalue1,tag2=tagvalue3 field1=1.0 unixtime2

measurement,tag1=tagvalue4,tag2=tagvalue3 field1=0.0 unixtime1

measurement,tag1=tagvalue4,tag2=tagvalue3 field1=1.0 unixtime2

measurement,tag1=tagvalue1,tag2=tagvalue5 field1=0.0 unixtime1

measurement,tag1=tagvalue1,tag2=tagvalue5 field1=1.0 unixtime2

measurement,tag1=tagvalue4,tag2=tagvalue5 field1=0.0 unixtime1

measurement,tag1=tagvalue4,tag2=tagvalue5 field1=1.0 unixtime2

measurement,tag1=tagvalue1,tag2=tagvalue2 field2=0.0 unixtime1

measurement,tag1=tagvalue1,tag2=tagvalue2 field2=1.0 unixtime2

measurement,tag1=tagvalue4,tag2=tagvalue2 field2=0.0 unixtime1

measurement,tag1=tagvalue4,tag2=tagvalue2 field2=1.0 unixtime2

measurement,tag1=tagvalue1,tag2=tagvalue3 field2=0.0 unixtime1

measurement,tag1=tagvalue1,tag2=tagvalue3 field2=1.0 unixtime2

measurement,tag1=tagvalue4,tag2=tagvalue3 field2=0.0 unixtime1

measurement,tag1=tagvalue4,tag2=tagvalue3 field2=1.0 unixtime2

measurement,tag1=tagvalue1,tag2=tagvalue5 field2=0.0 unixtime1

measurement,tag1=tagvalue1,tag2=tagvalue5 field2=1.0 unixtime2

measurement,tag1=tagvalue4,tag2=tagvalue5 field2=0.0 unixtime1

measurement,tag1=tagvalue4,tag2=tagvalue5 field2=1.0 unixtime2

I encourage you to replace the metasyntax timestamps with actual unix timestamps and try out the grouping on your own. You can use can use this unix timestamp converter to get two unix timestamps of your choice or you can use the following two values:

1628229600000000000 (or 2021-08-06T06:00:00.000000000Z)1628229900000000000 (or 2021-08-06T06:05:00.000000000Z)

Then write the data to InfluxDB with the CLI, API, or InfluxDB UI.

The following query will return the following 12 separate tables:

from(bucket: "bucket1")

|> range(start: 0)

Note that an extra row has been added to each table to denote if each column is part of the group key. The start column has been removed from the table response for simplicity. The table column has been added to help you keep track of the number of columns. Remember, the group key for the table column is an exception and it’s always set to false. The group key for the table is set to false because users can’t directly change the table number. The table record will always be the same across rows even though the group key is set to false.

| Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue2 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue2 | 1.0 | rfc3339time2 |

| Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 1 | measurement1 | field1 | tagvalue4 | tagvalue2 | 0.0 | rfc3339time1 |

| 1 | measurement1 | field1 | tagvalue4 | tagvalue2 | 1.0 | rfc3339time2 |

| Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 2 | measurement1 | field1 | tagvalue1 | tagvalue3 | 0.0 | rfc3339time1 |

| 2 | measurement1 | field1 | tagvalue1 | tagvalue3 | 1.0 | rfc3339time2 |

| Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 3 | measurement1 | field1 | tagvalue4 | tagvalue3 | 0.0 | rfc3339time1 |

| 3 | measurement1 | field1 | tagvalue4 | tagvalue3 | 1.0 | rfc3339time2 |

| Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 4 | measurement1 | field1 | tagvalue1 | tagvalue5 | 0.0 | rfc3339time1 |

| 4 | measurement1 | field1 | tagvalue1 | tagvalue5 | 1.0 | rfc3339time2 |

| Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 5 | measurement1 | field1 | tagvalue4 | tagvalue5 | 0.0 | rfc3339time1 |

| 5 | measurement1 | field1 | tagvalue4 | tagvalue5 | 1.0 | rfc3339time2 |

Aaa

| Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 6 | measurement1 | field2 | tagvalue1 | tagvalue2 | 0.0 | rfc3339time1 |

| 6 | measurement1 | field2 | tagvalue1 | tagvalue2 | 1.0 | rfc3339time2 |

| Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 7 | measurement1 | field2 | tagvalue4 | tagvalue2 | 0.0 | rfc3339time1 |

| 7 | measurement1 | field2 | tagvalue4 | tagvalue2 | 1.0 | rfc3339time2 |

| Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 8 | measurement1 | field2 | tagvalue1 | tagvalue3 | 0.0 | rfc3339time1 |

| 8 | measurement1 | field2 | tagvalue1 | tagvalue3 | 1.0 | rfc3339time2 |

| Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 9 | measurement1 | field2 | tagvalue4 | tagvalue3 | 0.0 | rfc3339time1 |

| 9 | measurement1 | field2 | tagvalue4 | tagvalue3 | 1.0 | rfc3339time2 |

| Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 10 | measurement1 | field2 | tagvalue1 | tagvalue5 | 0.0 | rfc3339time1 |

| 10 | measurement1 | field2 | tagvalue1 | tagvalue5 | 1.0 | rfc3339time2 |

| Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 11 | measurement1 | field2 | tagvalue4 | tagvalue5 | 0.0 | rfc3339time1 |

| 11 | measurement1 | field2 | tagvalue4 | tagvalue5 | 1.0 | rfc3339time2 |

By default each field name will be in a separate table, and then there are 6 unique combinations of tag values grouped with each field:

- tagvalue1 and tagvalue2

- tagvalue4 and tagvalue2

- tagvalue1 and tagvalue3

- tagvalue4 and tagvalue3

- tagvalue1 and tagvalue5

- tagvalue4 and tagvalue5

group()

The group() function can be to redefine the group keys, which will then result in regrouping the tables. We can begin by examining how defined the group key to a single column can affect the tables.

from(bucket: "bucket1")

|> range(start: 0)

|> group(columns: ["tag1"])

We know that there are 2 tag values for tag1 (tagvalue1 and tagvalue4), so we can predict that there will be two tables after the grouping:

| Not In Group Key | Not In Group Key | Not In Group Key | In Group Key | Not in Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue2 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue2 | 1.0 | rfc3339time2 |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue3 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue3 | 1.0 | rfc3339time2 |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue5 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue5 | 1.0 | rfc3339time2 |

| 0 | measurement1 | field2 | tagvalue1 | tagvalue2 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field2 | tagvalue1 | tagvalue2 | 1.0 | rfc3339time2 |

| 0 | measurement1 | field2 | tagvalue1 | tagvalue3 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field2 | tagvalue1 | tagvalue3 | 1.0 | rfc3339time2 |

| 0 | measurement1 | field2 | tagvalue1 | tagvalue5 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field2 | tagvalue1 | tagvalue5 | 1.0 | rfc3339time2 |

| Not In Group Key | Not In Group Key | Not In Group Key | In Group Key | Not in Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 1 | measurement1 | field1 | tagvalue4 | tagvalue2 | 0.0 | rfc3339time1 |

| 1 | measurement1 | field1 | tagvalue4 | tagvalue2 | 1.0 | rfc3339time2 |

| 1 | measurement1 | field1 | tagvalue4 | tagvalue3 | 0.0 | rfc3339time1 |

| 1 | measurement1 | field1 | tagvalue4 | tagvalue3 | 1.0 | rfc3339time2 |

| 1 | measurement1 | field1 | tagvalue4 | tagvalue5 | 0.0 | rfc3339time1 |

| 1 | measurement1 | field1 | tagvalue4 | tagvalue5 | 1.0 | rfc3339time2 |

| 1 | measurement1 | field2 | tagvalue4 | tagvalue2 | 0.0 | rfc3339time1 |

| 1 | measurement1 | field2 | tagvalue4 | tagvalue2 | 1.0 | rfc3339time2 |

| 1 | measurement1 | field2 | tagvalue4 | tagvalue3 | 0.0 | rfc3339time1 |

| 1 | measurement1 | field2 | tagvalue4 | tagvalue3 | 1.0 | rfc3339time2 |

| 1 | measurement1 | field2 | tagvalue4 | tagvalue5 | 0.0 | rfc3339time1 |

| 1 | measurement1 | field2 | tagvalue4 | tagvalue5 | 1.0 | rfc3339time2 |

If we group by both tags, then we can predict that there will be 6 tables because, as described above, there are three unique combinations of tag values:

from(bucket: "bucket1")

|> range(start: 0)

|> group(columns: ["tag1", "tag2"])

| Not In Group Key | Not In Group Key | In Group Key | In Group Key | Not In Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue2 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue2 | 1.0 | rfc3339time2 |

| 0 | measurement1 | field2 | tagvalue1 | tagvalue2 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field2 | tagvalue1 | tagvalue2 | 1.0 | rfc3339time2 |

| Not In Group Key | Not In Group Key | In Group Key | In Group Key | Not In Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 1 | measurement1 | field1 | tagvalue4 | tagvalue2 | 0.0 | rfc3339time1 |

| 1 | measurement1 | field1 | tagvalue4 | tagvalue2 | 1.0 | rfc3339time2 |

| 1 | measurement1 | field2 | tagvalue4 | tagvalue2 | 0.0 | rfc3339time1 |

| 1 | measurement1 | field2 | tagvalue4 | tagvalue2 | 1.0 | rfc3339time2 |

| Not In Group Key | Not In Group Key | In Group Key | In Group Key | Not In Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 2 | measurement1 | field1 | tagvalue1 | tagvalue3 | 0.0 | rfc3339time1 |

| 2 | measurement1 | field1 | tagvalue1 | tagvalue3 | 1.0 | rfc3339time2 |

| 2 | measurement1 | field2 | tagvalue1 | tagvalue3 | 0.0 | rfc3339time1 |

| 2 | measurement1 | field2 | tagvalue1 | tagvalue3 | 1.0 | rfc3339time2 |

| Not In Group Key | Not In Group Key | In Group Key | In Group Key | Not In Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 3 | measurement1 | field1 | tagvalue4 | tagvalue3 | 0.0 | rfc3339time1 |

| 3 | measurement1 | field1 | tagvalue4 | tagvalue3 | 1.0 | rfc3339time2 |

| 3 | measurement1 | field2 | tagvalue4 | tagvalue3 | 0.0 | rfc3339time1 |

| 3 | measurement1 | field2 | tagvalue4 | tagvalue3 | 1.0 | rfc3339time2 |

| Not In Group Key | Not In Group Key | In Group Key | In Group Key | Not In Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 4 | measurement1 | field1 | tagvalue1 | tagvalue5 | 0.0 | rfc3339time1 |

| 4 | measurement1 | field1 | tagvalue1 | tagvalue5 | 1.0 | rfc3339time2 |

| 4 | measurement1 | field2 | tagvalue1 | tagvalue5 | 0.0 | rfc3339time1 |

| 4 | measurement1 | field2 | tagvalue1 | tagvalue5 | 1.0 | rfc3339time2 |

| Not In Group Key | Not In Group Key | In Group Key | In Group Key | Not In Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 5 | measurement1 | field1 | tagvalue4 | tagvalue5 | 0.0 | rfc3339time1 |

| 5 | measurement1 | field1 | tagvalue4 | tagvalue5 | 1.0 | rfc3339time2 |

| 5 | measurement1 | field2 | tagvalue4 | tagvalue5 | 0.0 | rfc3339time1 |

| 5 | measurement1 | field2 | tagvalue4 | tagvalue5 | 1.0 | rfc3339time2 |

Grouping by field alone, we can predict that we will see a total of 2 tables, because the data set has only 2 field names.

from(bucket: "bucket1")

|> range(start: 0)

|> group(columns: ["_field"])

| Not In Group Key | Not In Group Key | In Group Key | Not In Group Key | Not In Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue2 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue2 | 1.0 | rfc3339time2 |

| 0 | measurement1 | field1 | tagvalue4 | tagvalue2 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field1 | tagvalue4 | tagvalue2 | 1.0 | rfc3339time2 |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue3 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue3 | 1.0 | rfc3339time2 |

| 0 | measurement1 | field1 | tagvalue4 | tagvalue3 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field1 | tagvalue4 | tagvalue3 | 1.0 | rfc3339time2 |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue5 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue5 | 1.0 | rfc3339time2 |

| 0 | measurement1 | field1 | tagvalue4 | tagvalue5 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field1 | tagvalue4 | tagvalue5 | 1.0 | rfc3339time2 |

| Not In Group Key | Not In Group Key | In Group Key | Not In Group Key | Not In Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 1 | measurement1 | field2 | tagvalue1 | tagvalue2 | 0.0 | rfc3339time1 |

| 1 | measurement1 | field2 | tagvalue1 | tagvalue2 | 1.0 | rfc3339time2 |

| 1 | measurement1 | field2 | tagvalue4 | tagvalue2 | 0.0 | rfc3339time1 |

| 1 | measurement1 | field2 | tagvalue4 | tagvalue2 | 1.0 | rfc3339time2 |

| 1 | measurement1 | field2 | tagvalue1 | tagvalue3 | 0.0 | rfc3339time1 |

| 1 | measurement1 | field2 | tagvalue1 | tagvalue3 | 1.0 | rfc3339time2 |

| 1 | measurement1 | field2 | tagvalue4 | tagvalue3 | 0.0 | rfc3339time1 |

| 1 | measurement1 | field2 | tagvalue4 | tagvalue3 | 1.0 | rfc3339time2 |

| 1 | measurement1 | field2 | tagvalue1 | tagvalue5 | 0.0 | rfc3339time1 |

| 1 | measurement1 | field2 | tagvalue1 | tagvalue5 | 1.0 | rfc3339time2 |

| 1 | measurement1 | field2 | tagvalue4 | tagvalue5 | 0.0 | rfc3339time1 |

| 1 | measurement1 | field2 | tagvalue4 | tagvalue5 | 1.0 | rfc3339time2 |

Any combination of columns can be used for grouping depending on your purposes. For example, we can ask for tables for each field with each value for tag1. We can predict that there will be 4 such tables, because there are two fields and two tag values for tag1:

from(bucket: "bucket1")

|> range(start: 0)

|> group(columns: ["_field", "tag1"])

| Not In Group Key | Not In Group Key | In Group Key | In Group Key | Not In Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue2 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue2 | 1.0 | rfc3339time2 |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue3 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue3 | 1.0 | rfc3339time2 |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue5 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue5 | 1.0 | rfc3339time2 |

| Not In Group Key | Not In Group Key | In Group Key | In Group Key | Not In Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 1 | measurement1 | field1 | tagvalue4 | tagvalue2 | 0.0 | rfc3339time1 |

| 1 | measurement1 | field1 | tagvalue4 | tagvalue2 | 1.0 | rfc3339time2 |

| 1 | measurement1 | field1 | tagvalue4 | tagvalue3 | 0.0 | rfc3339time1 |

| 1 | measurement1 | field1 | tagvalue4 | tagvalue3 | 1.0 | rfc3339time2 |

| 1 | measurement1 | field1 | tagvalue4 | tagvalue5 | 0.0 | rfc3339time1 |

| 1 | measurement1 | field1 | tagvalue4 | tagvalue5 | 1.0 | rfc3339time2 |

| Not In Group Key | Not In Group Key | In Group Key | In Group Key | Not In Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 2 | measurement1 | field2 | tagvalue1 | tagvalue2 | 0.0 | rfc3339time1 |

| 2 | measurement1 | field2 | tagvalue1 | tagvalue2 | 1.0 | rfc3339time2 |

| 2 | measurement1 | field2 | tagvalue1 | tagvalue3 | 0.0 | rfc3339time1 |

| 2 | measurement1 | field2 | tagvalue1 | tagvalue3 | 1.0 | rfc3339time2 |

| 2 | measurement1 | field2 | tagvalue1 | tagvalue5 | 0.0 | rfc3339time1 |

| 2 | measurement1 | field2 | tagvalue1 | tagvalue5 | 1.0 | rfc3339time2 |

| Not In Group Key | Not In Group Key | In Group Key | In Group Key | Not In Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 3 | measurement1 | field2 | tagvalue4 | tagvalue2 | 0.0 | rfc3339time1 |

| 3 | measurement1 | field2 | tagvalue4 | tagvalue2 | 1.0 | rfc3339time2 |

| 3 | measurement1 | field2 | tagvalue4 | tagvalue3 | 0.0 | rfc3339time1 |

| 3 | measurement1 | field2 | tagvalue4 | tagvalue3 | 1.0 | rfc3339time2 |

| 3 | measurement1 | field2 | tagvalue4 | tagvalue5 | 0.0 | rfc3339time1 |

| 3 | measurement1 | field2 | tagvalue4 | tagvalue5 | 1.0 | rfc3339time2 |

group() and Type Conflicts

In the above example, the values for field1 and field2 were always floats. Therefore, when grouping both of those fields into the same tables, it worked. However, recall that a column in InfluxDB can only have one type.

Given the following two tables which differ only in that they have a different field name, and their field values have a different type:

| table | _measurement | _field | _value | _time |

| 0 | measurement1 | field1 | 1i | rfc3339time2 |

| table | _measurement | _field | _value | _time |

| 1 | measurement1 | field2 | 1.0 | rfc3339time2 |

An attempt to group these two tables into the same table will result in the following error:

from(bucket: "bucket1")

|> range(start: 0)

|> group()

schema collision: cannot group integer and float types together

The simplest way to address this is to convert all of the values to floats using the toFloat() function. This function simply converts the value field for each record into a float if possible.

from(bucket: "bucket1")

|> range(start: 0)

|> toFloat()

|> group()

| table | _measurement | _field | _value | _time |

| 0 | measurement1 | field1 | 1.0 | rfc3339time2 |

| 0 | measurement1 | field2 | 1.0 | rfc3339time2 |

drop()/keep()

Another way to affect the group keys is to simply remove columns that are in the group key using the drop() or keep() functions. These two functions operate in the same manner, it’s just a matter of supplying a list of columns to eliminate vs. preserve.

Note that the following are equivalent:

from(bucket: "bucket1")

|> range(start: 0)

|> drop(columns: ["tag1","tag2"])

from(bucket: "bucket1")

|> range(start: 0)

|> keep(columns: ["_measurement","_field","_value","_time"])

In both cases the effect is to remove both of the tag columns from the table. Because tags are always in the group key by default, this change will leave only _measurement and _field in the group key. Because there is only one measurement, this will result in grouping solely by _field.

| Not in Group Key | In Group Key | In Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | _value | _time |

| 0 | measurement1 | field1 | 0.0 | 2021-08-17T21:22:00.000000000Z |

| 0 | measurement1 | field1 | 1.0 | 2021-08-17T21:22:01.000000000Z |

| 0 | measurement1 | field1 | 0.0 | 2021-08-17T21:22:02.000000000Z |

| 0 | measurement1 | field1 | 1.0 | 2021-08-17T21:22:03.000000000Z |

| 0 | measurement1 | field1 | 0.0 | 2021-08-17T21:22:04.000000000Z |

| 0 | measurement1 | field1 | 1.0 | 2021-08-17T21:22:05.000000000Z |

| 0 | measurement1 | field1 | 0.0 | 2021-08-17T21:22:00.000000000Z |

| 0 | measurement1 | field1 | 1.0 | 2021-08-17T21:22:01.000000000Z |

| 0 | measurement1 | field1 | 0.0 | 2021-08-17T21:22:02.000000000Z |

| 0 | measurement1 | field1 | 1.0 | 2021-08-17T21:22:03.000000000Z |

| 0 | measurement1 | field1 | 0.0 | 2021-08-17T21:22:04.000000000Z |

| 0 | measurement1 | field1 | 1.0 | 2021-08-17T21:22:05.000000000Z |

| Not in Group Key | In Group Key | In Group Key | Not in Group Key | Not in Group Key |

| 1 | _measurement | _field | _value | _time |

| 1 | measurement1 | field1 | 0.0 | 2021-08-17T21:22:00.000000000Z |

| 1 | measurement1 | field1 | 1.0 | 2021-08-17T21:22:01.000000000Z |

| 1 | measurement1 | field1 | 0.0 | 2021-08-17T21:22:02.000000000Z |

| 1 | measurement1 | field1 | 1.0 | 2021-08-17T21:22:03.000000000Z |

| 1 | measurement1 | field1 | 0.0 | 2021-08-17T21:22:04.000000000Z |

| 1 | measurement1 | field1 | 1.0 | 2021-08-17T21:22:05.000000000Z |

| 1 | measurement1 | field1 | 0.0 | 2021-08-17T21:22:00.000000000Z |

| 1 | measurement1 | field1 | 1.0 | 2021-08-17T21:22:01.000000000Z |

| 1 | measurement1 | field1 | 0.0 | 2021-08-17T21:22:02.000000000Z |

| 1 | measurement1 | field1 | 1.0 | 2021-08-17T21:22:03.000000000Z |

| 1 | measurement1 | field1 | 0.0 | 2021-08-17T21:22:04.000000000Z |

| 1 | measurement1 | field1 | 1.0 | 2021-08-17T21:22:05.000000000Z |

Note that drop() and keep() are both susceptible to the same type conflicts that can cause errors with group().

rename()

The rename() function does not change the group key, but simply changes the names of the columns. It works by providing the function with a mapping of old column names to new column names.

Given the following very simple table:

| _measurement | tag1 | _field | _value | _time |

| measurement1 | tagvalue1 | field1 | 1i | rfc3339time1 |

The following code simply renames the tag1 column:

from(bucket: "bucket1")

|> range(start: 0)

|> rename(columns: {"tag1":"tag2"})

| _measurement | tag2 | _field | _value | _time |

| measurement1 | tagvalue1 | field1 | 1i | rfc3339time1 |

You can rename any column which can be very useful for things like formatting data to present to users. However, renaming can have some unintended consequences. For example, if you rename the _value column, certain functions will have surprising results or fail because they operate on _value.

from(bucket: "bucket1")

|> range(start: 0)

|> rename(columns: {"_value":"astring"})

| _measurement | tag1 | _field | astring | _time |

| measurement1 | tagvalue1 | field1 | 1i | rfc3339time1 |

from(bucket: "bucket1")

|> range(start: 0)

|> rename(columns: {"_value":"astring"})

|> toFloat()

| _measurement | tag1 | _field | _value | astring | _time |

| measurement1 | tagvalue1 | field1 | 1i | rfc3339time1 |

In this case, because there was no _value column to convert from, the toFloat() method had no data to place in the _value column that it creates.

Creating a Single Table or Ungrouping

Finally, it is possible to put all of the data into a single table assuming that you avoid type conflicts. This is achieved by using the group() function with no arguments. Basically making the group key empty, so all of the data gets grouped into a single table. This is effectively the same as ungrouping.

from(bucket: "bucket1")

|> range(start: 0)

|> group()

| Not In Group Key | Not In Group Key | In Group Key | Not In Group Key | Not In Group Key | Not in Group Key | Not in Group Key |

| table | _measurement | _field | tag1 | tag2 | _value | _time |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue2 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue2 | 1.0 | rfc3339time2 |

| 0 | measurement1 | field1 | tagvalue4 | tagvalue2 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field1 | tagvalue4 | tagvalue2 | 1.0 | rfc3339time2 |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue3 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue3 | 1.0 | rfc3339time2 |

| 0 | measurement1 | field1 | tagvalue4 | tagvalue3 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field1 | tagvalue4 | tagvalue3 | 1.0 | rfc3339time2 |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue5 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field1 | tagvalue1 | tagvalue5 | 1.0 | rfc3339time2 |

| 0 | measurement1 | field1 | tagvalue4 | tagvalue5 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field1 | tagvalue4 | tagvalue5 | 1.0 | rfc3339time2 |

| 0 | measurement1 | field2 | tagvalue1 | tagvalue2 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field2 | tagvalue1 | tagvalue2 | 1.0 | rfc3339time2 |

| 0 | measurement1 | field2 | tagvalue4 | tagvalue2 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field2 | tagvalue4 | tagvalue2 | 1.0 | rfc3339time2 |

| 0 | measurement1 | field2 | tagvalue1 | tagvalue3 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field2 | tagvalue1 | tagvalue3 | 1.0 | rfc3339time2 |

| 0 | measurement1 | field2 | tagvalue4 | tagvalue3 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field2 | tagvalue4 | tagvalue3 | 1.0 | rfc3339time2 |

| 0 | measurement1 | field2 | tagvalue1 | tagvalue5 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field2 | tagvalue1 | tagvalue5 | 1.0 | rfc3339time2 |

| 0 | measurement1 | field2 | tagvalue4 | tagvalue5 | 0.0 | rfc3339time1 |

| 0 | measurement1 | field2 | tagvalue4 | tagvalue5 | 1.0 | rfc3339time2 |

Windowing

Windowing is when you group data by the start and stop times with the window() function. In previous sections the _start and _stop columns have been omitted for simplicity because they usually represent the start and stop times defined in the range function and their values remain unchanged. However, the window() function affects the values of the _start and _stop columns so we’ll include these columns in this section. The window() function doesn’t affect the _time column, so we’ll exclude this column to simplify the examples in this section. To illustrate how the window() function works let’s filter our data for tagvalue1 and tagvalue4 and include the _start and _stop columns:

from(bucket: "bucket1")

|> range(start: 2021-08-17T00:00:00, stop:2021-08-17T3:00:00 )

|> filter(fn: (r) => r["tag1"] == "tagvalue1" or r["tag1"] == "tagvalue4")

|> filter(fn: (r) => r["_field"] == "field1")

| Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | Not in Group Key | Not in Group Key |

| table | _start | _stop | _measurement | _field | tag1 | tag2 | _value | _time |

| 0 | 2021-08-17T00:00:00 | 2021-08-17T03:00:00 | measurement1 | field1 | tagvalue1 | tagvalue2 | 0.0 | 2021-08-17T01:00:00 |

| 0 | 2021-08-17T00:00:00 | 2021-08-17T03:00:00 | measurement1 | field1 | tagvalue1 | tagvalue2 | 1.0 | 2021-08-17T02:00:00 |

| Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | Not in Group Key | Not in Group Key |

| table | _start | _stop | _measurement | _field | tag1 | tag2 | _value | _time |

| 1 | 2021-08-17T00:00:00 | 2021-08-17T03:00:00 | measurement1 | field1 | tagvalue4 | tagvalue2 | 0.0 | 2021-08-17T01:00:00 |

| 1 | 2021-08-17T00:00:00 | 2021-08-17T03:00:00 | measurement1 | field1 | tagvalue4 | tagvalue2 | 1.0 | 2021-08-17T02:00:00 |

If you apply the window() function the values in the _start and _stop column will change to reflect the defined window period:

from(bucket: "bucket1")

|> range(start: 2021-08-17T00:00:00, stop:2021-08-17T3:00:00 )

|> filter(fn: (r) => r["tag1"] == "tagvalue1" or r["tag1"] == "tagvalue4")

|> filter(fn: (r) => r["tag2"] == "tagvalue2")

|> window(period: 90m)

// the following syntax is synonymous with the line above |> window(every: 90m)

| Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | Not in Group Key | Not in Group Key |

| table | _start | _stop | _measurement | _field | tag1 | tag2 | _value | _time |

| 0 | 2021-08-17T00:00:00 | 2021-08-17T01:30:00 | measurement1 | field1 | tagvalue1 | tagvalue2 | 0.0 | 2021-08-17T01:00:00 |

| Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | Not in Group Key | Not in Group Key |

| table | _start | _stop | _measurement | _field | tag1 | tag2 | _value | _time |

| 1 | 2021-08-17T00:00:00 | 2021-08-17T01:30:00 | measurement1 | field1 | tagvalue4 | tagvalue2 | 0.0 | 2021-08-17T01:00:00 |

| Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | Not in Group Key | Not in Group Key |

| table | _start | _stop | _measurement | _field | tag1 | tag2 | _value | _time |

| 2 | 2021-08-17T01:30:00 | 2021-08-17T03:00:00 | measurement1 | field1 | tagvalue1 | tagvalue2 | 1.0 | 2021-08-17T02:00:00 |

| Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | Not in Group Key | Not in Group Key |

| table | _start | _stop | _measurement | _field | tag1 | tag2 | _value | _time |

| 3 | 2021-08-17T01:30:00 | 2021-08-17T03:00:00 | measurement1 | field1 | tagvalue4 | tagvalue2 | 1.0 | 2021-08-17T02:00:00 |

The boundary for the window period, as defined by either the period or every paramenter, is not based on execution time or the timestamps of any points returned by the range function. Instead windowing occurs at the top of the second, minute, hour, month, year. Additionally, The window boundaries or groupings won’t exceed the timestamps of the data you return from the range function. For example, imagine the following scenario: You query for data with |> range(start: 2022-01-20T20:18:00.000Z, stop: 2022-01-20T21:19:25.000Z) and your last record has a timestamp in that range of 2022-01-20T20:19:20.000Z. You’re every/periodduration is 30s. Then your last window group will have a _start value of 2022-01-20T20:19:00.000Z and a _stop of value of 2022-01-20T20:19:30.000Z. Notice how window grouping does not extend until the stop value specified by the range function. Instead, the grouping stops to include the final point.

Windowing is performed for two main reasons:

- To aggregate data across fields or tags with timestamps in the same period.

- To transform high precision series into a lower resolution aggregation.

To aggregate data across fields or tags with similar timestamps, you can first apply the window() function like above, then you can group your data by the _start times. Now data that’s in the same window will be in the same table, so you can apply an aggregation after:

from(bucket: "bucket1")

|> range(start: 2021-08-17T00:00:00, stop:2021-08-17T3:00:00 )

|> filter(fn: (r) => r["tag1"] == "tagvalue1" or r["tag1"] == "tagvalue4")

|> window(period: 90m)

|> group(columns: ["_start"], mode:"by")

|> yield(name:"after group")

|> sum()

|> yield(name:"after sum")

The result after the first yield, “after group” looks like:

| Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | Not in Group Key | Not in Group Key | Not in Group Key | Not in Group Key |

| table | _start | _stop | _measurement | _field | tag1 | tag2 | _value | _time |

| 0 | 2021-08-17T00:00:00 | 2021-08-17T01:30:00 | measurement1 | field1 | tagvalue1 | tagvalue2 | 0.0 | 2021-08-17T01:00:00 |

| 0 | 2021-08-17T00:00:00 | 2021-08-17T01:30:00 | measurement1 | field1 | tagvalue4 | tagvalue2 | 0.0 | 2021-08-17T01:00:00 |

| Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | Not in Group Key | Not in Group Key |

| table | _start | _stop | _measurement | _field | tag1 | tag2 | _value | _time |

| 1 | 2021-08-17T01:30:00 | 2021-08-17T03:00:00 | measurement1 | field1 | tagvalue1 | tagvalue2 | 1.0 | 2021-08-17T02:00:00 |

| 1 | 2021-08-17T01:30:00 | 2021-08-17T03:00:00 | measurement1 | field1 | tagvalue4 | tagvalue2 | 1.0 | 2021-08-17T02:00:00 |

The result after the first yield, “after sum” looks like:

| Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | Not in Group Key | Not in Group Key | Not in Group Key |

| table | _start | _stop | _measurement | _field | tag1 | tag2 | _value |

| 0 | 2021-08-17T00:00:00 | 2021-08-17T01:30:00 | measurement1 | field1 | tagvalue1 | tagvalue2 | 0.0 |

| Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | Not in Group Key |

| table | _start | _stop | _measurement | _field | tag1 | tag2 | _value |

| 1 | 2021-08-17T01:30:00 | 2021-08-17T03:00:00 | measurement1 | field1 | tagvalue1 | tagvalue2 | 2.0 |

The sum() function is an aggregator so the _time column is removed because there isn’t a timestamp associated with the sum of two values. Keep in mind that in this example the timestamps in the _time column in the “after group” output happen to be the same, but this aggregation across fields within time windows would work even if the timestamps were different. The _time column isn’t a part of the group key.

Windowing back in time

When you apply the window() function, you group data on forward in time or create window bounds that align with the start of your data. You can’t window back it time, but you can produce the same effect by using the offset parameter. This parameter specifies the duration to shift the window boundaries by. You can use the offset parameter to make the windows always align with the current time which effectively groups the data backwards in time.

option offset = duration(v: int(v: now()))

data = from(bucket: "bucket1")

|> range(start: -1m)

|> filter(fn: (r) => r["_measurement"] == "measurement1")

|> yield(name: "data")

data

|> aggregateWindow(every: 30s, fn: mean, createEmpty: false, offset: offset)

|> yield(name: "offset effectively windowing backward in time")

Alternatively, you could calculate the duration difference between your actual start time and the required start time so that your windows align with stop time instead. Then you could add that duration to the start with the offset parameter. The previous approach is the recommended approach, but examining multiple approaches lends us an appreciation for the power and flexibility that Flux provides.

data = from(bucket: "bucket1")

|> range(start: 2021-08-19T19:23:37.000Z, stop: 2021-08-19T19:24:18.000Z )

|> filter(fn: (r) => r["_measurement"] == "measurement1")

lastpoint = data |> last() |> findRecord(fn: (key) => true , idx:0 )

// the last point is 2021-08-19T19:24:15.000Z

firstpoint = data |> first() |> findRecord(fn: (key) => true , idx:0 )

// the first point is 2021-08-19T19:23:40.000Z

time1 = uint(v: firstpoint._time)

// 1629401020000000000

time2 = uint(v: lastpoint._time)

// 1629401055000000000

mywindow = uint(v: 15s)

remainder = math.remainder(x: float(v:time2) - float(v:time1), y: float(v:mywindow))

// remainder of (1629401055000000000 - 1629401055000000000)/15000000000 = 5000000000

myduration = duration(v: uint(v: remainder))

//5s

data

|> aggregateWindow(every: 15s, fn: mean, offset: myduration)

Windowing and aggregateWindow()

The most common reason for using the window() function is to transform high precision data into lower resolution aggregations. Simply applying a sum() after a window would calculate the sum of the data for each series within the window period. To better illustrate window() function let’s look at the following simplified input data:

| Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | Not in Group Key | Not in Group Key |

| table | _start | _stop | _measurement | _field | _value | _time |

| 0 | 2021-08-17T00:00:00 | 2021-08-17T01:30:00 | measurement1 | field1 | 0.0 | 2021-08-17T00:30:00 |

| 0 | 2021-08-17T00:00:00 | 2021-08-17T01:30:00 | measurement1 | field1 | 1.0 | 2021-08-17T01:00:00 |

| 0 | 2021-08-17T00:00:00 | 2021-08-17T01:30:00 | measurement1 | field1 | 2.0 | 2021-08-17T01:30:00 |

| 0 | 2021-08-17T00:00:00 | 2021-08-17T01:30:00 | measurement1 | field1 | 3.0 | 2021-08-17T02:00:00 |

The _time column has been removed because the window() function doesn’t affect the values of the _time column. It only affects the values of the _start and _stop columns. The window() function calculates windows of time based of the duration specified with the period or every parameter and groups the records based on the bounds of that window period. The following query would return one table with the sum for all the points in the series within a 90 min window:

from(bucket: "bucket1")

|> range(start: 2021-08-17T00:00:00, stop:2021-08-17T3:00:00 )

|> filter(fn: (r) => r["_field"] == "field1")

|> window(period: 90m)

|> sum()

| Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | Not in Group Key |

| table | _start | _stop | _measurement | _field | _value |

| 1 | 2021-08-17T00:00:00 | 2021-08-17T01:30:00 | measurement1 | field1 | 5.0 |

By using the window() function following an aggregation function, we’ve reduced the number of points in our series by half. We’ve transformed a higher resolution data set into a lower resolution sum over 90 min windows. This combination of functions introduces another similar function, the aggregateWindow() function.

The aggregateWindow() function windows data and applies an aggregate function or selector function to the data. You can think of the aggregateWindow() function as being a combination of the window() function followed by an aggregate or selector function. The difference between the window() function and the aggregateWindow() function is that the aggregateWindow() function applies a group to your data at the end so that your lower resolution aggregations aren’t separated into different tables by their window period. Instead all lower resolution aggregations are grouped together. In other words, these two queries are equivalent:

query1 = from(bucket: "bucket1")

|> range(start: 2021-08-17T00:00:00, stop:2021-08-17T3:00:00 )

|> filter(fn: (r) => r["_field"] == "field1" or r["_field"] == "field2" )

|> window(period: 90m)

|> sum()

|> group(columns: ["_field"],mode:"by")

query2 = from(bucket: "bucket1")

|> range(start: 2021-08-17T00:00:00, stop:2021-08-17T3:00:00 )

|> filter(fn: (r) => r["_field"] == "field1" or r["_field"] == "field2" )

|> aggregateWindow(every: 90m, fn: sum)

Real World Data Example of Grouping

Having reviewed grouping and aggregation using clean instructive data, it is worthwhile to review the concepts again, but looking at real world data. The NOAA buoy data is a good sample set to look at due to its complexity.

For example, to take a closer look at wind speeds, the following query will simply return all of the tables with the field “wind_speed_mps.”

from(bucket: "noaa")

|> range(start: -12h)

|> filter(fn: (r) => r["_field"] == "wind_speed_mps")

This query reads back data for a total of around 628 tables (this value will be more or less depending on the time range queried). Due to the combination of tag values and the restricted time range, most of the tables returned have only a single row. Here are the first few rows as an example.

Default Grouping

| In Group Key | In Group Key | Not in Group Key | Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key |

| _start | _stop | _time | _value | _field | _measurement | station_id | station_name | station_owner | station_pgm | station_type |

| 2021-08-03T03:35:12.486582468Z | 2021-08-03T15:35:12.486582468Z | 2021-08-03T05:00:00Z | 9 | wind_speed_mps | ndbc | 46303 | Southern Georgia Strait | Environment and Climate Change Canada | International Partners | buoy |

| In Group Key | In Group Key | Not in Group Key | Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key |

| _start | _stop | _time | _value | _field | _measurement | station_id | station_name | station_owner | station_pgm | station_type |

| 2021-08-03T03:35:12.486582468Z | 2021-08-03T15:35:12.486582468Z | 2021-08-03T05:00:00Z | 7.7 | wind_speed_mps | ndbc | FWYF1 | Fowey Rock, FL | NDBC | NDBC Meteorological/Ocean | fixed |

| In Group Key | In Group Key | Not in Group Key | Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key |

| _start | _stop | _time | _value | _field | _measurement | station_id | station_name | station_owner | station_pgm | station_type |

| 2021-08-03T03:35:12.486582468Z | 2021-08-03T15:35:12.486582468Z | 2021-08-03T04:30:00Z | 2.1 | wind_speed_mps | ndbc | GELO1 | Geneva on the Lake Light, OH | NWS Eastern Region | IOOS Partners | fixed |

| In Group Key | In Group Key | Not in Group Key | Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key |

| _start | _stop | _time | _value | _field | _measurement | station_id | station_name | station_owner | station_pgm | station_type |

| 2021-08-03T03:35:12.486582468Z | 2021-08-03T15:35:12.486582468Z | 2021-08-03T05:18:00Z | 4.6 | wind_speed_mps | ndbc | FMOA1 | 8734673 - Fort Morgan, AL | NOAA NOS PORTS | NOS/CO-OPS | fixed |

| In Group Key | In Group Key | Not in Group Key | Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key |

| _start | _stop | _time | _value | _field | _measurement | station_id | station_name | station_owner | station_pgm | station_type |

| 2021-08-03T03:35:12.486582468Z | 2021-08-03T15:35:12.486582468Z | 2021-08-03T05:18:00Z | 6.7 | wind_speed_mps | ndbc | TXPT2 | 8770822 - Texas Point, Sabine Pass, TX | TCOON | IOOS Partners | fixed |

| In Group Key | In Group Key | Not in Group Key | Not in Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key | In Group Key |

| _start | _stop | _time | _value | _field | _measurement | station_id | station_name | station_owner | station_pgm | station_type |

| 2021-08-03T03:35:12.486582468Z | 2021-08-03T15:35:12.486582468Z | 2021-08-03T05:30:00Z | 2 | wind_speed_mps | ndbc | 45170 | Michigan City Buoy, IN | Illinois-Indiana Sea Grant and Purdue Civil Engineering | IOOS Partners | buoy |

group()

The group() function can be to redefine the group keys, which will then result in entirely different tables. For example:

from(bucket: "noaa")

|> range(start: -12h)

|> filter(fn: (r) => r["_field"] == "wind_speed_mps")

|> group(columns: ["station_type"])

This call to group() tells Flux to make only the single column station_type to be in the set of columns in the group key. station_type has four possible values (“buoy”,”fixed”, “oilrig”, and “other”). As a result, we know that the results will then contain exactly 4 tables. Here are excerpts from those tables:

| In Group Key | In Group Key | Not in Group Key | Not in Group Key | Not in Group Key | Not in Group Key | Not in Group Key | Not in Group Key | Not in Group Key | Not in Group Key | In Group Key |

| _start | _stop | _time | _value | _field | _measurement | station_id | station_name | station_owner | station_pgm | station_type |

| 2021-08-03T03:50:09.78158678Z | 2021-08-03T15:50:09.78158678Z | 2021-08-03T05:00:00Z | 3 | wind_speed_mps | ndbc | 22102 | Korean Meteorological Administration | International Partners | buoy | |

| 2021-08-03T03:50:09.78158678Z | 2021-08-03T15:50:09.78158678Z | 2021-08-03T05:00:00Z | 4 | wind_speed_mps | ndbc | 22103 | Korean Meteorological Administration | International Partners | buoy | |

| 2021-08-03T03:50:09.78158678Z | 2021-08-03T15:50:09.78158678Z | 2021-08-03T05:00:00Z | 2 | wind_speed_mps | ndbc | 22104 | Korean Meteorological Administration | International Partners | buoy | |

| 2021-08-03T03:50:09.78158678Z | 2021-08-03T15:50:09.78158678Z | 2021-08-03T05:00:00Z | 6 | wind_speed_mps | ndbc | 22105 | Korean Meteorological Administration | International Partners | buoy | |

| 2021-08-03T03:50:09.78158678Z | 2021-08-03T15:50:09.78158678Z | 2021-08-03T05:00:00Z | 5 | wind_speed_mps | ndbc | 22106 | Korean Meteorological Administration | International Partners | buoy | |

| ... |

| In Group Key | In Group Key | Not in Group Key | Not in Group Key | Not in Group Key | Not in Group Key | Not in Group Key | Not in Group Key | Not in Group Key | Not in Group Key | In Group Key |

| _start | _stop | _time | _value | _field | _measurement | station_id | station_name | station_owner | station_pgm | station_type |

| 2021-08-03T03:50:09.78158678Z | 2021-08-03T15:50:09.78158678Z | 2021-08-03T04:30:00Z | 5 | wind_speed_mps | ndbc | 32ST0 | Stratus | Woods Hole Oceanographic Institution | IOOS Partners | fixed |

| 2021-08-03T03:50:09.78158678Z | 2021-08-03T15:50:09.78158678Z | 2021-08-03T05:00:00Z | 4.1 | wind_speed_mps | ndbc | 62103 | Channel Lightship | UK Met Office | International Partners | fixed |

| 2021-08-03T03:50:09.78158678Z | 2021-08-03T15:50:09.78158678Z | 2021-08-03T04:45:00Z | 0 | wind_speed_mps | ndbc | ACXS1 | Bennetts Point, ACE Basin Reserve, SC | National Estuarine Research Reserve System | NERRS | fixed |

| 2021-08-03T03:50:09.78158678Z | 2021-08-03T15:50:09.78158678Z | 2021-08-03T05:30:00Z | 5.7 | wind_speed_mps | ndbc | AMAA2 | East Amatuli Island Light, AK | NDBC | NDBC Meteorological/Ocean | fixed |

| 2021-08-03T03:50:09.78158678Z | 2021-08-03T15:50:09.78158678Z | 2021-08-03T04:30:00Z | 0 | wind_speed_mps | ndbc | ANMN6 | Field Station, Hudson River Reserve, NY | National Estuarine Research Reserve System | NERRS | fixed |

| ... |

| In Group Key | In Group Key | Not in Group Key | Not in Group Key | Not in Group Key | Not in Group Key | Not in Group Key | Not in Group Key | Not in Group Key | Not in Group Key | In Group Key |

| _start | _stop | _time | _value | _field | _measurement | station_id | station_name | station_owner | station_pgm | station_type |

| 2021-08-03T03:50:09.78158678Z | 2021-08-03T15:50:09.78158678Z | 2021-08-03T05:00:00Z | 2.6 | wind_speed_mps | ndbc | 62114 | Tartan "A" AWS | Private Industry Oil Platform | International Partners | oilrig |

| 2021-08-03T03:50:09.78158678Z | 2021-08-03T15:50:09.78158678Z | 2021-08-03T05:00:00Z | 2.1 | wind_speed_mps | ndbc | 62121 | Carrack AWS | Private Industry Oil Platform | International Partners | oilrig |

| 2021-08-03T03:50:09.78158678Z | 2021-08-03T15:50:09.78158678Z | 2021-08-03T05:00:00Z | 1.5 | wind_speed_mps | ndbc | 62144 | Clipper AWS | Private Industry Oil Platform | International Partners | oilrig |

| 2021-08-03T03:50:09.78158678Z | 2021-08-03T15:50:09.78158678Z | 2021-08-03T05:00:00Z | 2.6 | wind_speed_mps | ndbc | 62145 | North Sea | Private Industry Oil Platform | International Partners | oilrig |

| 2021-08-03T03:50:09.78158678Z | 2021-08-03T15:50:09.78158678Z | 2021-08-03T05:00:00Z | 1.5 | wind_speed_mps | ndbc | 62146 | Lomond AWS | Private Industry Oil Platform | International Partners | oilrig |

| ... |

| In Group Key | In Group Key | Not in Group Key | Not in Group Key | Not in Group Key | Not in Group Key | Not in Group Key | Not in Group Key | Not in Group Key | Not in Group Key | In Group Key |

| _start | _stop | _time | _value | _field | _measurement | station_id | station_name | station_owner | station_pgm | station_type |

| 2021-08-03T03:50:09.78158678Z | 2021-08-03T15:50:09.78158678Z | 2021-08-03T05:20:00Z | 9 | wind_speed_mps | ndbc | 41002 | SOUTH HATTERAS - 225 NM South of Cape Hatteras | NDBC | NDBC Meteorological/Ocean | other |

| 2021-08-03T03:50:09.78158678Z | 2021-08-03T15:50:09.78158678Z | 2021-08-03T05:20:00Z | 6 | wind_speed_mps | ndbc | 41009 | CANAVERAL 20 NM East of Cape Canaveral, FL | NDBC | NDBC Meteorological/Ocean | other |

| 2021-08-03T03:50:09.78158678Z | 2021-08-03T15:50:09.78158678Z | 2021-08-03T05:20:00Z | 12 | wind_speed_mps | ndbc | 41010 | CANAVERAL EAST - 120NM East of Cape Canaveral | NDBC | NDBC Meteorological/Ocean | other |

| 2021-08-03T03:50:09.78158678Z | 2021-08-03T15:50:09.78158678Z | 2021-08-03T05:20:00Z | 2 | wind_speed_mps | ndbc | 41013 | Frying Pan Shoals, NC | NDBC | NDBC Meteorological/Ocean | other |

| 2021-08-03T03:50:09.78158678Z | 2021-08-03T15:50:09.78158678Z | 2021-08-03T05:20:00Z | 8 | wind_speed_mps | ndbc | 41040 | NORTH EQUATORIAL ONE- 470 NM East of Martinique | NDBC | NDBC Meteorological/Ocean | other |

| ... |

You may note that _start and _stop are also in the group key. Remember that these columns are added by Flux to specify the time range of the data being returned. For data from a single query, these values will always be the same for all rows, and thus will not change the number of tables.

You can further group by including multiple columns. For example, one can add station_pgm (the name of the partner organization providing the data) to the group key as well:

from(bucket: "noaa")

|> range(start: -12h)

|> filter(fn: (r) => r["_field"] == "wind_speed_mps")

|> group(columns: ["station_type", "station_pgm"])

Now we can see in the returned tables, that because station_type and station_pgm are in the group key, the unique combinations of those values are in separate tables. For example IOOS Partners have both buoy stations and fixed stations, so those different station types are grouped into separate tables.

| In Group Key | In Group Key | Not In Group Key | Not In Group Key | Not In Group Key | Not In Group Key | Not In Group Key | Not In Group Key | Not In Group Key | In Group Key | In Group Key |

| _start | _stop | _time | _value | _field | _measurement | station_id | station_name | station_owner | station_pgm | station_type |

| 2021-08-03T04:11:46.849771273Z | 2021-08-03T16:11:46.849771273Z | 2021-08-03T05:08:00Z | 2 | wind_speed_mps | ndbc | 41033 | Fripp Nearshore, SC (FRP2) | CORMP | IOOS Partners | buoy |

| 2021-08-03T04:11:46.849771273Z | 2021-08-03T16:11:46.849771273Z | 2021-08-03T05:08:00Z | 2 | wind_speed_mps | ndbc | 41037 | Wrightsville Beach Offshore, NC (ILM3) | CORMP | IOOS Partners | buoy |

| 2021-08-03T04:11:46.849771273Z | 2021-08-03T16:11:46.849771273Z | 2021-08-03T05:00:00Z | 7 | wind_speed_mps | ndbc | 41052 | South of St. John, VI | Caribbean Integrated Coastal Ocean Observing System (CarICoos) | IOOS Partners | buoy |

| 2021-08-03T04:11:46.849771273Z | 2021-08-03T16:11:46.849771273Z | 2021-08-03T05:00:00Z | 4 | wind_speed_mps | ndbc | 41053 | San Juan, PR | Caribbean Integrated Coastal Ocean Observing System (CarICoos) | IOOS Partners | buoy |

| 2021-08-03T04:11:46.849771273Z | 2021-08-03T16:11:46.849771273Z | 2021-08-03T05:00:00Z | 6 | wind_speed_mps | ndbc | 41056 | Vieques Island, PR | Caribbean Integrated Coastal Ocean Observing System (CarICoos) | IOOS Partners | buoy |

| ... |

| In Group Key | In Group Key | Not In Group Key | Not In Group Key | Not In Group Key | Not In Group Key | Not In Group Key | Not In Group Key | Not In Group Key | In Group Key | In Group Key |

| _start | _stop | _time | _value | _field | _measurement | station_id | station_name | station_owner | station_pgm | station_type |

| 2021-08-03T04:11:46.849771273Z | 2021-08-03T16:11:46.849771273Z | 2021-08-03T04:30:00Z | 5 | wind_speed_mps | ndbc | 32ST0 | Stratus | Woods Hole Oceanographic Institution | IOOS Partners | fixed |

| 2021-08-03T04:11:46.849771273Z | 2021-08-03T16:11:46.849771273Z | 2021-08-03T04:30:00Z | 7 | wind_speed_mps | ndbc | 41NT0 | NTAS - Northwest Tropical Atlantic | Woods Hole Oceanographic Institution | IOOS Partners | fixed |

| 2021-08-03T04:11:46.849771273Z | 2021-08-03T16:11:46.849771273Z | 2021-08-03T05:18:00Z | 3.1 | wind_speed_mps | ndbc | ANPT2 | 8775241 - Aransas, Aransas Pass, TX | TCOON | IOOS Partners | fixed |

| 2021-08-03T04:11:46.849771273Z | 2021-08-03T16:11:46.849771273Z | 2021-08-03T05:30:00Z | 4.1 | wind_speed_mps | ndbc | APNM4 | Alpena Harbor Light, Alpena, MI | GLERL | IOOS Partners | fixed |

| 2021-08-03T04:11:46.849771273Z | 2021-08-03T16:11:46.849771273Z | 2021-08-03T04:40:00Z | 1.5 | wind_speed_mps | ndbc | BHRI3 | Burns Harbor, IN | NWS Central Region | IOOS Partners | fixed |

| ... |

drop()/keep()

Another way to affect the group keys is to simply remove columns that are in the group key using the drop() or keep() functions. These two functions operate in the same manner, it’s just a matter of supplying a list of columns to eliminate vs. preserve.

The following are equivalent:

from(bucket: "noaa")

|> range(start: -12h)

|> filter(fn: (r) => r._measurement == "ndbc")

|> filter(fn: (r) => r._field == "wind_speed_mps")

|> drop(columns: ["_measurement","_start","_stop","station_name","station_owner"])

from(bucket: "noaa")

|> range(start: -12h)

|> filter(fn: (r) => r._measurement == "ndbc")

|> filter(fn: (r) => r._field == "wind_speed_mps")

|> keep(columns: ["_field","_value","_time","station_id","station_pgm", "station_type"])

The first thing to notice is that applying keep() cleans up the data dramatically, making it much easier to read and work with. A common use of drop() and keep() therefore, is just to make the data more readable.

However, if you drop a column that is in the group key, this will impact the tables. For example, the query above results in 559 tables, because it leaves station_id, station_pgm, and station_type all in the group key, and the combination of those unique sets of tag values, adds up to 559 different combinations.

If we also drop the station_id, this drops the tag with the most unique values:

from(bucket: "noaa")

|> range(start: -12h)