Monitoring and Managing InfluxDB

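Operational Monitoring Template

Imagine you're using InfluxDB Cloud and writing millions of metrics to your account. You're also running a variety of downsampling and data transformation tasks. Whether you're building an IoT application on top of InfluxDB or monitoring your production environment with InfluxDB, your time series operations are finally running smoothly, and you want to keep it that way. You might be a Free Plan Cloud user or a Usage-Based Plan user, but either way, you need visibility into the size of your instance to manage resources and costs. Use the InfluxDB Operational Monitoring Template to protect against runaway series cardinality and to make sure your tasks succeed.

Installing and Using the Operational Monitoring Template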

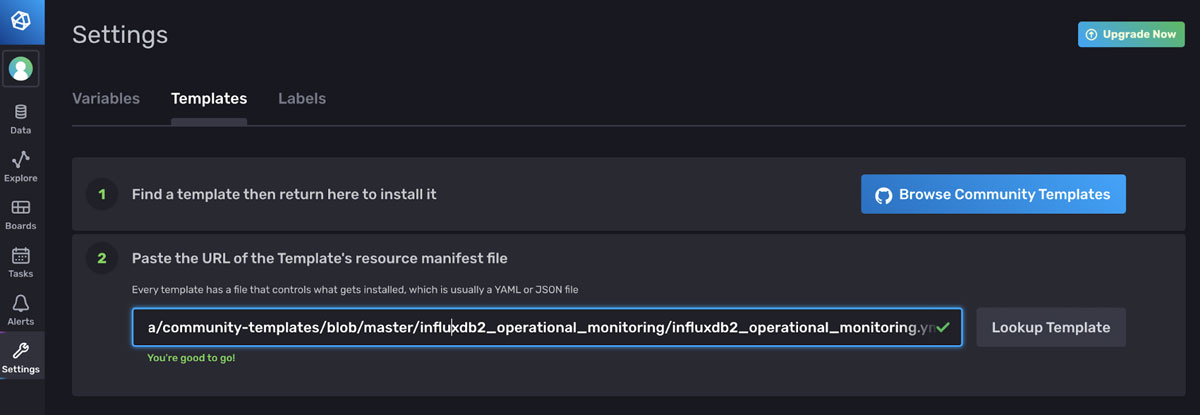

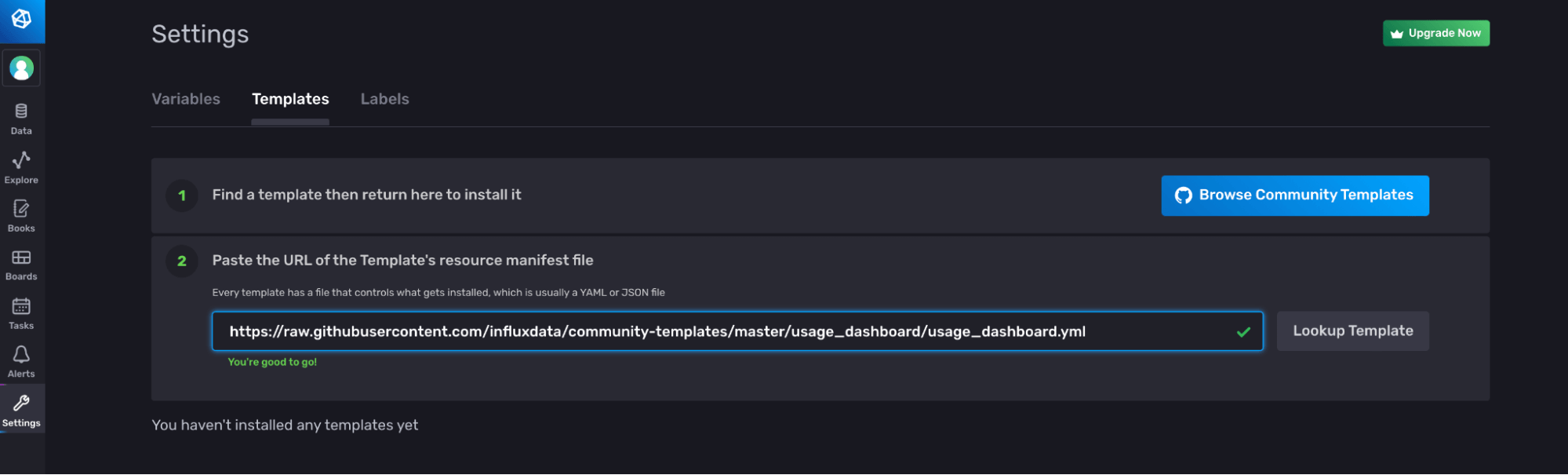

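If you're new to InfluxData, InfluxDB Templates are preconfigured, shareable collections of dashboards, tasks, alerts, Telegraf configurations, and more. You apply them by copying the URL of a template of interest from the Community Templates and pasting it into the UI. Today, we'll apply the Operational Monitoring Template to our InfluxDB Cloud account to monitor our cardinality and task execution.

An example of how to apply a template through the UI. Navigate to the Templates tab on the Settings page and paste the link to the YAML or JSON of the template you want to apply.

The Operational Monitoring Template consists of the following resources: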

1. 1 [Bucket](https://docs.influxdb.org.cn/influxdb/v2.0/organizations/buckets/): cardinality

2. 1 [Task](https://docs.influxdb.org.cn/influxdb/v2.0/process-data/): cardinality_by_bucket that runs every hour. This task is responsible for calculating the cardinality of all of your buckets and writing the calculation to the cardinality bucket.

3. 1 [Label](https://docs.influxdb.org.cn/influxdb/v2.0/visualize-data/labels/): operational_monitoring. This label helps you find the resources that are part of this template more easily within the UI.

4. 2 [Variables](https://docs.influxdb.org.cn/influxdb/v2.0/visualize-data/variables/): bucket and measurement.

5. 2 [Dashboards](https://docs.influxdb.org.cn/influxdb/v2.0/visualize-data/dashboards/):

* Task Summary Dashboard. This dashboard displays information about your task runs, successes and failures.

* Cardinality Explorer. This dashboard helps you visualize your series cardinality for all of the buckets. Drill down into your measurement cardinality to [solve your runaway cardinality](https://influxdb.org.cn/blog/solving-runaway-series-cardinality-when-using-influxdb/) problem.

Understanding the Task Summary Dashboard

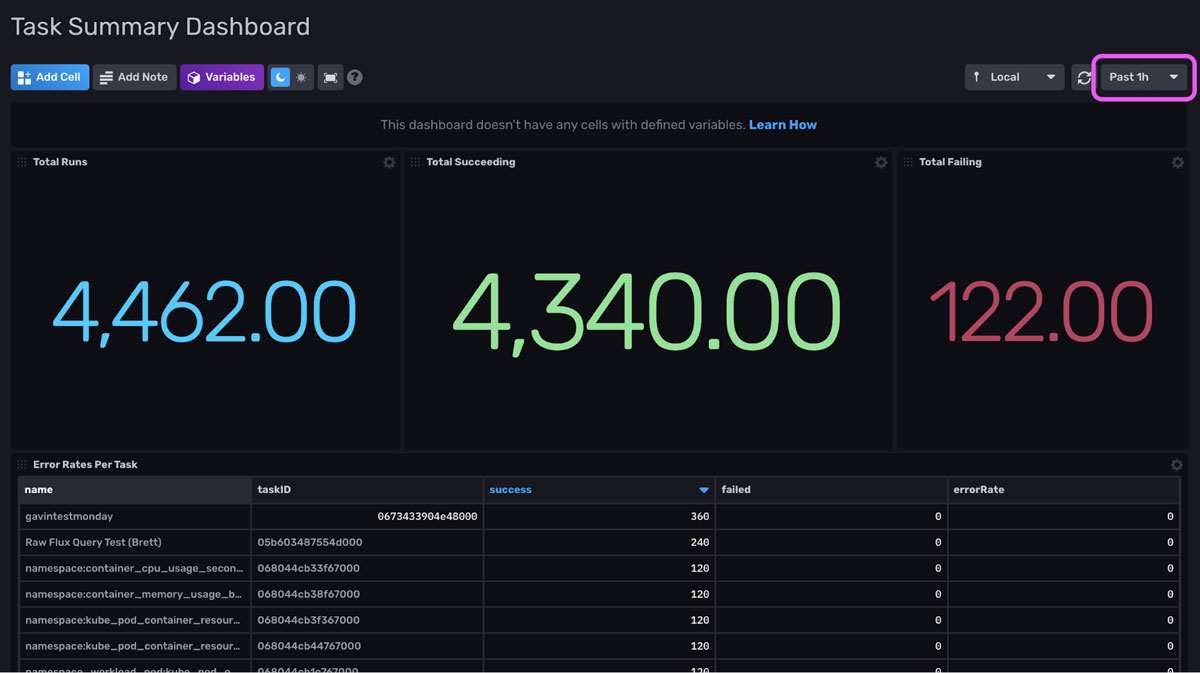

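Both the Task Summary Dashboard and the Cardinality Explorer Dashboard can be used to monitor the execution status of specific tasks and the growth of your series. The Task Summary Dashboard looks like this:

An example of the Task Summary Dashboard. It provides information about the success of task runs over the past hour, as controlled by the time range dropdown configuration (pink square).

The first three cells of this dashboard let you quickly evaluate the success of all of your tasks over the specified time range. From this screenshot, we can see that we have 80 failed task runs. The second row of cells lets us sort tasks by their number of successful and failed runs in the time range. It also gives us the error rate and the task ID.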

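More of the Task Summary Dashboard. It provides information about the success of task runs over the past hour.

The dashboard also includes an Error List cell. This cell contains information about all failed runs, along with the individual errorMessages for each runID. You can use this cell to determine why a task failed. Below it, the "Error Rates Over Time" cell lets you see whether the number of failing tasks is growing. A linear line indicates that the same set of tasks is failing, whereas an exponential line indicates that more and more tasks are failing.

More of the Task Summary Dashboard. It provides information about the success of task runs over the past hour.

The last cell of the Task Summary Dashboard, "Last Successful Run Per Task", helps you identify when a task failed so that you can debug it more easily. Now that we've reviewed the features of the Task Summary Dashboard, let's take a look at the Cardinality Explorer Dashboard.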

Supplementing the Template with Your Own Task Alerts

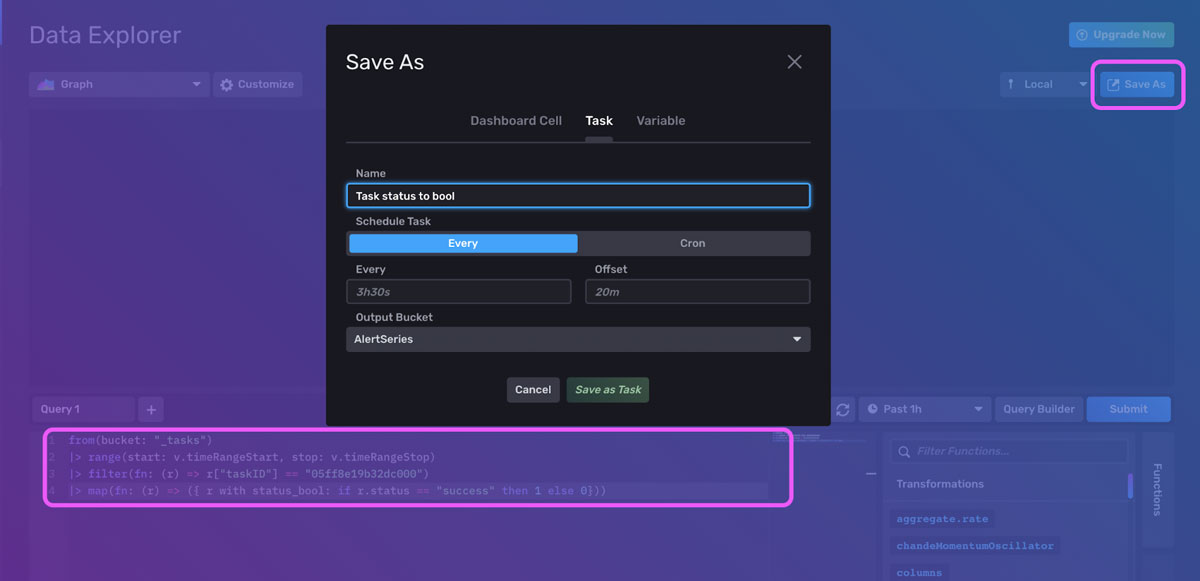

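If you're running tasks that are critical to your time series use case, I recommend setting up alerts to monitor when those tasks fail. Use the Task Summary Dashboard to find the taskID associated with the task you want to alert on. To create a threshold alert on failed runs, the task status first has to be converted into a numerical value. Navigate to the Data Explorer and use the map() function in the following Flux script to convert the status into a numeric flag, status_bool, that is 1 for a successful run and 0 otherwise: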

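    from(bucket: "_tasks")
        |> range(start: v.timeRangeStart, stop: v.timeRangeStop)
        // Replace this taskID with the ID of the task you want to alert on.
        |> filter(fn: (r) => r["taskID"] == "05ff8e19b32dc000")
        // Encode the run status as a number: 1 for success, 0 for anything else.
        |> map(fn: (r) => ({r with status_bool: if r.status == "success" then 1 else 0}))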

Click the Save As button to convert the query into an alert. Then create a threshold check that alerts when the value of status_bool falls below 1.
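To decide which tasks deserve an alert in the first place, it can help to rank tasks by how often they fail. The following is a minimal sketch, not part of the template. It assumes that the `_tasks` system bucket stores run records in a `runs` measurement with a `logs` field (both names are assumptions; confirm them in the Data Explorer), and it treats any status other than "success" as a failure, mirroring the map() call above.

```flux
from(bucket: "_tasks")
    |> range(start: -1h)
    // Assumption: run records live in the "runs" measurement; keeping a single
    // field ("logs") leaves one row per run.
    |> filter(fn: (r) => r._measurement == "runs" and r._field == "logs")
    // Treat every status other than "success" as a failed run.
    |> filter(fn: (r) => r.status != "success")
    |> group(columns: ["taskID"])
    |> count()
```

Each output table holds the number of unsuccessful runs for one taskID over the past hour.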

Understanding the Cardinality Explorer Dashboard

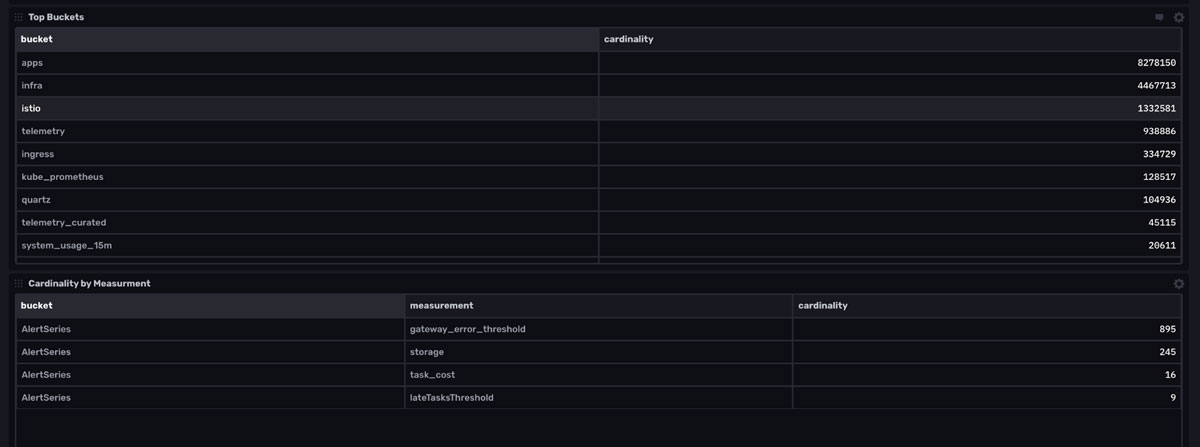

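The Cardinality Explorer Dashboard provides the cardinality of each bucket in your InfluxDB instance.

An example of the Cardinality Explorer Dashboard. It provides information about the cardinality of your InfluxDB instance. The labels let you visualize metrics with the dropdown configuration (orange).

The variables at the top of the dashboard let you easily switch between buckets and measurements.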

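- The graph in the first cell, "Cardinality by Bucket", lets you monitor the cardinality of all of your buckets at a glance. We can see that our cardinality stays within the same range. The cardinality of every bucket has a small standard deviation, which is a good sign: it lets us verify that we don't have a series cardinality problem. It could be worthwhile to create additional alerts on top of this bucket to notify you when your cardinality exceeds the expected standard deviation. Dips in cardinality can be evidence of successful data expiration or data deletion. Spikes in cardinality can result from a momentary overlap between newly ingested data and old data that is about to be evicted. A spike can also be caused by a significant event.

- The second cell, "Top Buckets", provides a table view of the largest buckets and their cardinality.

- The third cell, "Cardinality by Measurement", lets you drill down into specific measurements for each bucket, as selected by the bucket and measurement variables. This is especially useful for finding the source of runaway series cardinality.

- Use the last cell (not shown), "Cardinality by Tag", to find unbounded tags. It's worth noting that the Flux scripts used to perform these calculations are quite clever.

In the next section, we'll learn how the cardinality_by_bucket task works. Because the dashboard queries follow the same logic as the task, this also helps us understand how these cells generate their cardinality data.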

Cardinality Task Explained

The cardinality task, cardinality_by_bucket, consists of the following Flux code:

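    import "influxdata/influxdb"

    // The task option defines task.every, which sets the cardinality window below.
    option task = {name: "cardinality_by_bucket", every: 1h}

    buckets()
        |> map(fn: (r) => {
            // Series cardinality of this bucket over the last task interval.
            cardinality = influxdb.cardinality(bucket: r.name, start: -task.every)
                |> findRecord(idx: 0, fn: (key) => true)

            return {
                _time: now(),
                _measurement: "buckets",
                bucket: r.name,
                _field: "cardinality",
                _value: cardinality._value,
            }
        })
        |> to(bucket: "cardinality")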

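The Flux influxdata/influxdb package provides the influxdb.cardinality() function, which returns the series cardinality of the data in a particular bucket. The buckets() function returns a list of buckets. The map() function here effectively "iterates" over the list of bucket names. For each bucket, the function passed to map() calls influxdb.cardinality(), uses findRecord() to extract the first record of the result, and stores it in the cardinality variable. The return statement builds the output row: the bucket name (r.name) goes in the bucket column, the field key is "cardinality", and the field value is cardinality._value. This data is then written to our cardinality bucket.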

Supplementing the Template with Your Own Cardinality Alerts

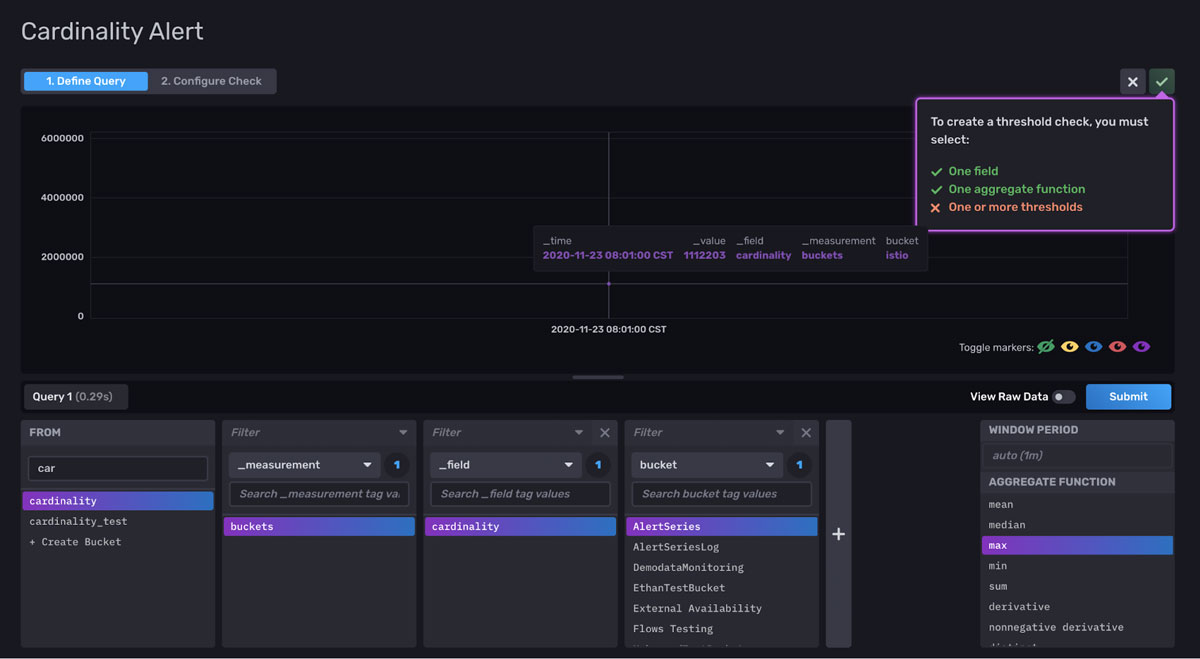

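To get the most out of this template, I recommend setting up a cardinality threshold alert on your cardinality bucket. The fastest way to create an alert is through the UI. Navigate to the **Alerts** tab in the UI and start by querying your cardinality. Although it's hard to read our cardinality data in the graph visualization (the default visualization type in the **Alerts** UI), I can hover over the points or look at the data in the **View Raw Data** mode to get a better understanding of my data.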

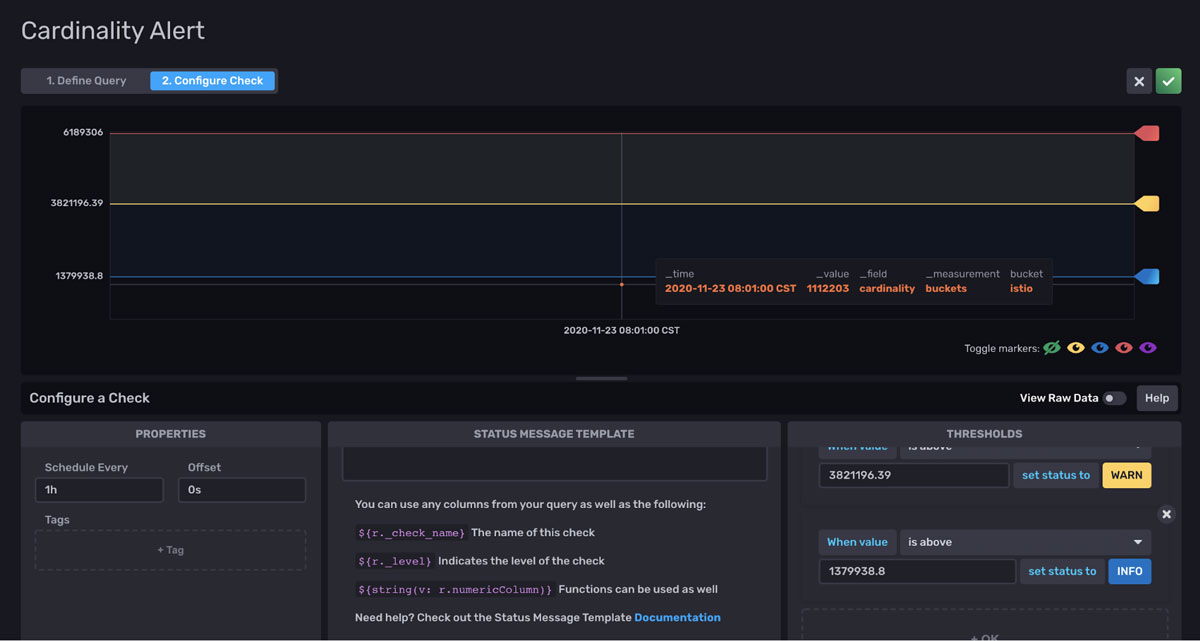

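I notice that my cardinality data falls roughly into three ranges. Next, I configure alerts around those ranges. If the cardinality of my buckets exceeds any of these three thresholds, I'll be alerted.

This type of alerting can help you avoid hitting your cardinality limit in InfluxDB Cloud. Specifically, Free Plan and Usage-Based Plan users will want to be alerted when they approach 80% of their cardinality limit, which is 10,000 for the Free Plan and an initial 1 million for the Usage-Based Plan. You might also consider creating a task that sums the cardinality across all of your buckets and alerts on the total cardinality.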
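The idea of summing cardinality across buckets and comparing the total with 80% of a limit can be sketched as follows. This is an illustrative query, not part of the template. It assumes the cardinality bucket is populated by the cardinality_by_bucket task, and the names cardinalityLimit and alertThreshold are hypothetical. The hard-coded 10000 is the Free Plan limit quoted above; substitute your own limit.

```flux
// Assumption: 10000 is the Free Plan limit; replace it with your own limit.
cardinalityLimit = 10000
alertThreshold = float(v: cardinalityLimit) * 0.8

from(bucket: "cardinality")
    |> range(start: -2h)
    |> filter(fn: (r) => r._measurement == "buckets" and r._field == "cardinality")
    // Keep only the most recent reading for each bucket.
    |> last()
    // Merge all buckets into one table and add up their cardinalities.
    |> group()
    |> sum()
    |> map(
        fn: (r) => ({
            total_cardinality: r._value,
            over_threshold: float(v: r._value) >= alertThreshold,
        }),
    )
```

With a limit of 10,000, alertThreshold is 8,000, so over_threshold becomes true once the summed cardinality reaches 8,000 series.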

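If you notice that your cardinality is growing significantly, you can decide whether you need to request a higher cardinality limit or downsample your historical data. To request a higher cardinality limit, click the **Upgrade Now** button in the top right corner of the InfluxDB UI.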

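Initial Data Delay for the Cardinality Explorer Dashboard

Note that the Cardinality Explorer Dashboard will be empty until the cardinality_by_bucket task has run. The task runs every hour, so it may take some time before the dashboard is populated with meaningful data. However, if you need to visualize your cardinality data right away, you can repurpose the script from the cardinality_by_bucket task and choose the time range over which you want to collect cardinality data. Then, if you like, use the to() function to write the cardinality data to the cardinality bucket. Use the subDuration() function to create the correct timestamp.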

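    import "influxdata/influxdb"
    import "experimental"

    // Timestamp for the backfilled points: 7 days before now.
    timestamp = experimental.subDuration(d: 7d, from: now())

    buckets()
        |> map(fn: (r) => {
            // Series cardinality of this bucket over the past 7 days.
            cardinality = influxdb.cardinality(bucket: r.name, start: -7d)
                |> findRecord(idx: 0, fn: (key) => true)

            return {
                _time: timestamp,
                _measurement: "buckets",
                bucket: r.name,
                _field: "cardinality",
                _value: cardinality._value,
            }
        })
        // To store the result, append: |> to(bucket: "cardinality")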

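InfluxDB Cloud Usage Template

Use the InfluxDB Cloud Usage Template to gain visibility into your usage and take proactive action before you hit your limits. In this section, we'll apply the InfluxDB Cloud Usage Template to our InfluxDB Cloud account to monitor our usage and rate-limiting events. Rate-limiting events occur when a user exceeds the usage limits of their Cloud account. Use the following command to apply the InfluxDB Cloud Usage Template through the CLI: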

    influx apply --file https://raw.githubusercontent.com/influxdata/community-templates/master/usage_dashboard/usage_dashboard.yml

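Alternatively, you can apply the template through the UI. Navigate to the **Templates** tab on the Settings page and paste the link to the YAML or JSON of the template you want to apply.

The InfluxDB Cloud Usage Template consists of the following resources:

- 1 Dashboard: Usage Dashboard. This dashboard lets you track your InfluxDB Cloud data usage and limit events.

- 1 Task: Cardinality Limit Alert (Usage Dashboard), which runs every hour. This task is responsible for determining whether your cardinality has exceeded the cardinality limit associated with your Cloud account.

- 1 Label: usage_dashboard. This label helps you find the resources that are part of this template more easily within the UI and through the API.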

Usage Dashboard Explained

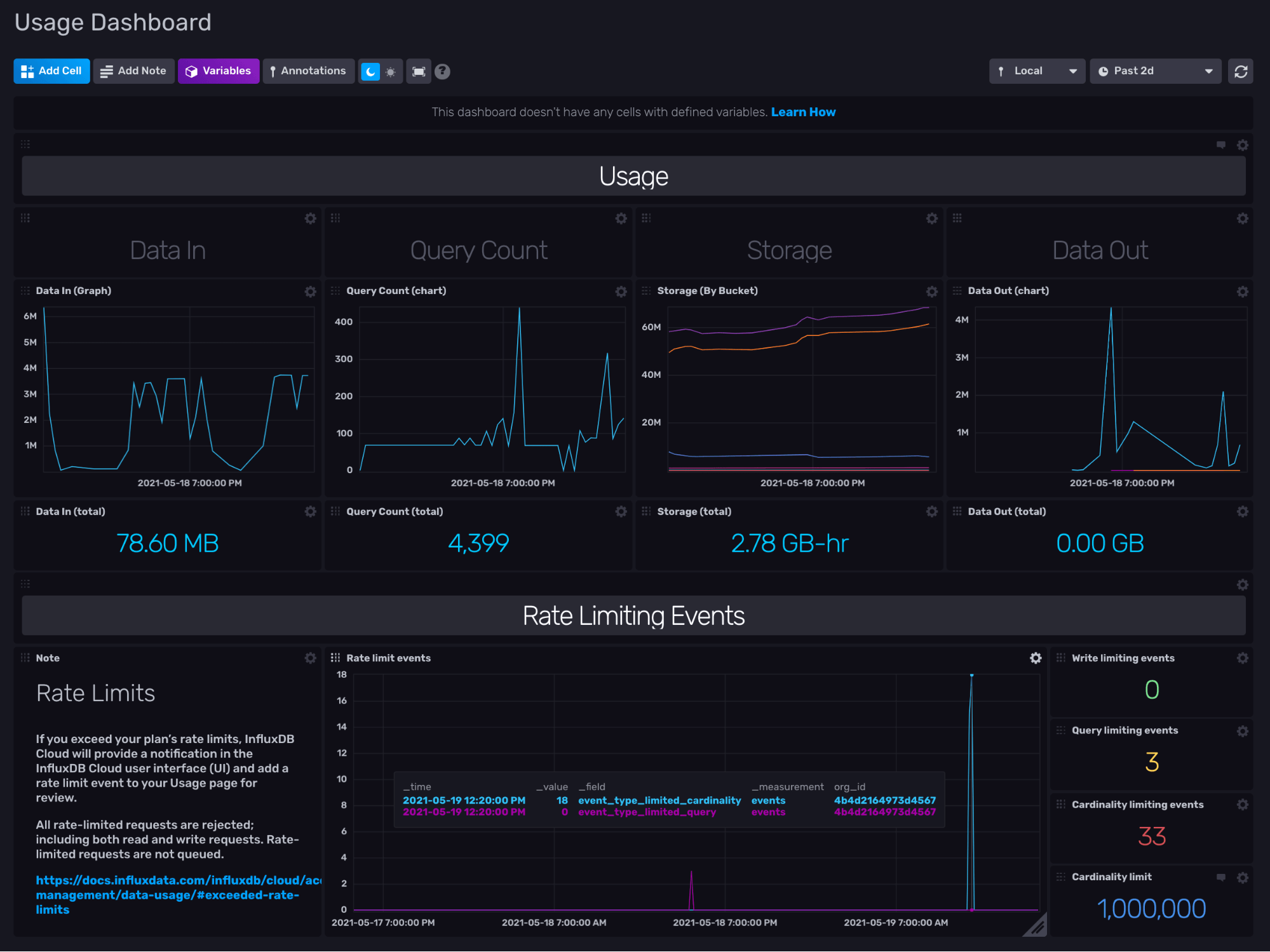

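The InfluxDB Cloud Usage Template contains the Usage Dashboard, which gives you visibility into your Data In, Query Count, Storage, Data Out, and Rate Limit Events.

The cells in the dashboard display the following information:

- Data In (Graph) visualizes the total number of bytes written to your InfluxDB org through the /write endpoint.

- Query Count (Graph) visualizes the number of queries executed.

- Storage (by Bucket) visualizes the total number of bytes in each bucket and across all buckets.

- Data Out (Graph) visualizes the total number of bytes queried from your InfluxDB org through the /query endpoint.

- Rate Limit Events visualizes the number of times limits were hit.

- Rate Limit Events by event type, in the bottom right cell, breaks the events down to show the number of write limit, query limit, and cardinality limit events, as well as the total cardinality limit for your org.

All of these visualizations automatically aggregate the data to a 1-hour resolution. Essentially, this dashboard supplements the data on the Usage page in the InfluxDB UI to give you deeper insight into your usage.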

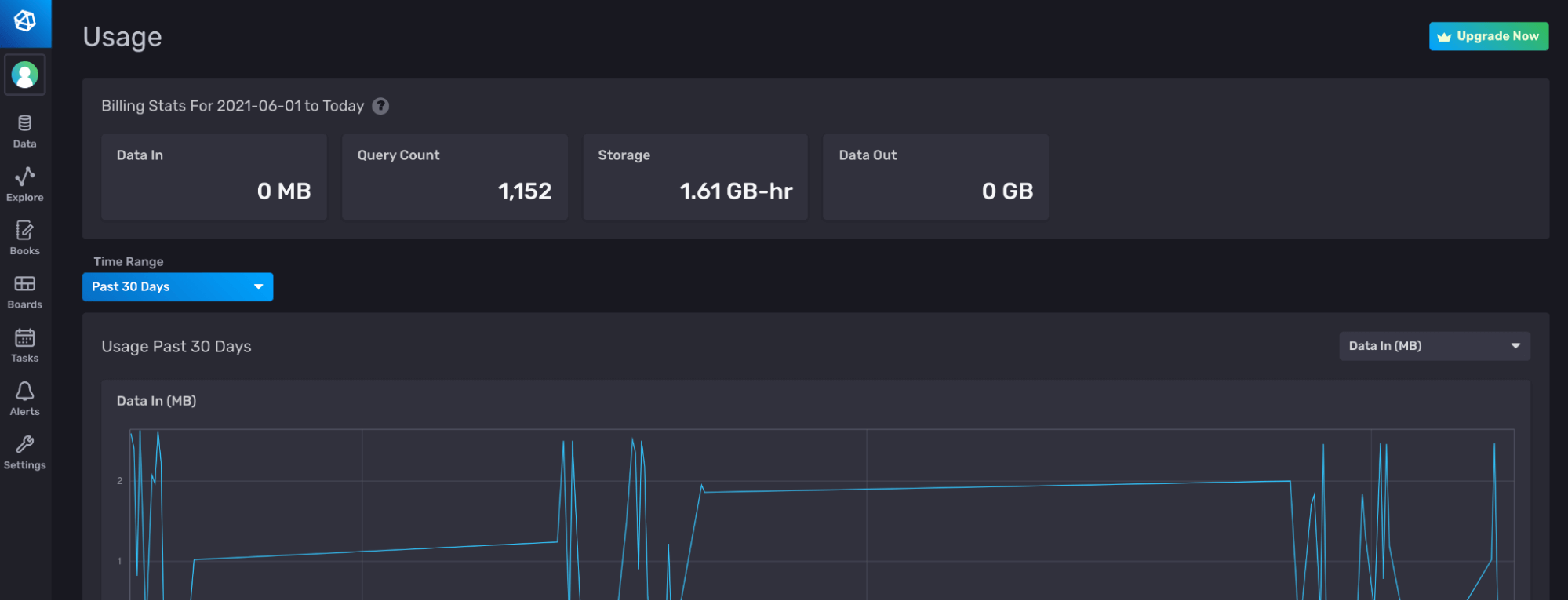

The Usage page in the InfluxDB UI contains general information about your usage.

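Understanding the Flux Behind the Usage Dashboard

To fully understand the InfluxDB Cloud Usage Template, you need to understand the Flux behind the Usage Dashboard. Let's take a look at the query that produces the Data In (Graph) visualization in the first cell.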

    import "math"
    import "experimental/usage"

    usage.from(start: v.timeRangeStart, stop: v.timeRangeStop)
        // Keep only the request bytes sent to the write endpoints.
        |> filter(
            fn: (r) =>
                r._measurement == "http_request"
                    and (r.endpoint == "/api/v2/write" or r.endpoint == "/write")
                    and r._field == "req_bytes",
        )
        |> group()
        |> keep(columns: ["_value", "_field", "_time"])
        |> fill(column: "_value", value: 0)
        // Convert bytes to megabytes, rounded to two decimal places.
        |> map(fn: (r) => ({r with write_mb: math.round(x: float(v: r._value) / 10000.0) / 100.0}))

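First, we import the packages needed to run the Flux query that produces our visualization. The Flux math package provides basic mathematical functions and constants. The Flux experimental/usage package provides functions for collecting usage and usage limit data related to your InfluxDB Cloud account. All of the cells, dashboards, and tasks in the InfluxDB Cloud Usage Template use the Flux experimental/usage package. The usage.from() function returns usage data. We filter the usage data for the "req_bytes" field from any writes. Then we group the write data into a single table, fill any null values with 0, and convert the byte counts to megabytes, storing the result in a new column, "write_mb".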
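The rounding in the final map() call is easier to follow with concrete numbers. Dividing by 10000.0, rounding, and then dividing by 100.0 is equivalent to dividing by 1,000,000 and keeping two decimal places. The sketch below applies the same expression to two made-up byte counts (hypothetical values, not real usage data), using array.from() so that it runs without access to usage data.

```flux
import "array"
import "math"

// Hypothetical byte counts standing in for summed req_bytes values.
array.from(rows: [{_value: 1234567}, {_value: 50000000}])
    |> map(fn: (r) => ({r with write_mb: math.round(x: float(v: r._value) / 10000.0) / 100.0}))
```

For the first row, 1234567 / 10000.0 is 123.4567, which rounds to 123.0 and yields a write_mb of 1.23. The second row yields 50.0.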

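Exploring the experimental/usage Flux Package

The experimental/usage package contains two functions: usage.from() and usage.limits().

The usage.from() function maps to the org/{orgID}/usage endpoint in the InfluxDB v2 API and returns usage data for your InfluxDB org. It requires you to specify the start and stop parameters. By default, the function returns usage data aggregated as hourly sums. This default downsampling lets you query usage data over long periods of time efficiently. If you want to view raw high-resolution data, query your data over a short time range (a few hours) and set raw: true. By default, usage.from() returns usage data from the InfluxDB org you're currently using. To query an outside InfluxDB Cloud org, supply the host, orgID, and token parameters. usage.from() returns usage data with the following schema: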

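- _time: the time of the usage event (raw: true) or of the downsampled usage data (raw: false).

- _bucket_id: the bucket ID for bucket-specific usage, such as the storage_usage_bucket_bytes measurement (tag).

- _org_id: the ID of the org from which usage data is being queried (tag).

- _endpoint: the InfluxDB v2 API endpoint from which usage data is being collected (tag).

- _status: the HTTP status code (tag).

- _measurement: the measurement.

  - http_request

  - storage_usage_bucket_bytes

  - query_count

  - events

- _field: the field key.

  - resp_bytes: the number of bytes in one response when raw: true. In the downsampled data (raw: false), the value is summed over each hour for each endpoint.

  - gauge: the number of bytes in the bucket indicated by the bucket_id tag at the point in time indicated by _time. If your bucket has an infinite retention policy, then the sum of your req_bytes from the time you started writing data to the bucket equals your gauge value.

  - req_bytes: the number of bytes in one request when raw: true. In the downsampled data (raw: false), the value is summed over each hour for each endpoint.

  - event_type_limited_cardinality: the number of cardinality limit events.

  - event_type_limited_query: the number of query limit events.

  - event_type_limited_write: the number of write limit events.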
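As an illustration of the raw option described above, here is a minimal sketch that requests unaggregated write usage for the last two hours. The two-hour window is an arbitrary choice for the example, and the query only returns data when run against an InfluxDB Cloud org.

```flux
import "experimental/usage"

// raw: true returns individual usage events instead of hourly sums,
// so keep the time range short.
usage.from(start: -2h, stop: now(), raw: true)
    |> filter(fn: (r) => r._measurement == "http_request" and r._field == "req_bytes")
```

Dropping the raw parameter (or setting raw: false) returns the same measurement as hourly sums, which is the better choice for long time ranges.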

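The usage.limits() function maps to the org/{orgID}/limits endpoint in the InfluxDB v2 API. It returns a record, specifically a JSON object, of the usage limits associated with your InfluxDB Cloud org. To view the record in VS Code or the InfluxDB UI, I recommend using the array.from function, like so: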

    import "experimental/usage"
    import "array"

    limits = usage.limits()

    // Flatten selected limits from the nested record into a single table row.
    array.from(
        rows: [
            {
                orgID: limits.orgID,
                write_rate: limits.rate.writeKBs,
                query_rate: limits.rate.readKBs,
                bucket: limits.bucket.maxBuckets,
                task: limits.task.maxTasks,
                dashboard: limits.dashboard.maxDashboards,
                check: limits.check.maxChecks,
                notificationRule: limits.notificationRule.maxNotifications,
            },
        ],
    )

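The Flux above essentially uses the array.from function to turn the JSON record into a table, which is returned as annotated CSV. Here's an example of the JSON object that usage.limits() returns, to help you understand where the nested JSON keys come from.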

    {
        orgID: "123",
        rate: {
            readKBs: 1000,
            concurrentReadRequests: 0,
            writeKBs: 17,
            concurrentWriteRequests: 0,
            cardinality: 10000
        },
        bucket: {
            maxBuckets: 2,
            maxRetentionDuration: 2592000000000000
        },
        task: {
            maxTasks: 5
        },
        dashboard: {
            maxDashboards: 5
        },
        check: {
            maxChecks: 2
        },
        notificationRule: {
            maxNotifications: 2,
            blockedNotificationRules: "comma, delimited, list"
        },
        notificationEndpoint: {
            blockedNotificationEndpoints: "comma, delimited, list"
        }
    }
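The same pattern can surface the cardinality limit itself. The sketch below reads rate.cardinality from the record shown above and derives an alerting level at 80% of it, following the 80% guideline given earlier for cardinality alerts. The column names cardinality_limit and alert_at are hypothetical, chosen only for this example.

```flux
import "array"
import "experimental/usage"

limits = usage.limits()

// rate.cardinality is the org's cardinality limit in the example record.
array.from(
    rows: [
        {
            cardinality_limit: limits.rate.cardinality,
            alert_at: float(v: limits.rate.cardinality) * 0.8,
        },
    ],
)
```

For the example record, with a cardinality limit of 10000, alert_at would be 8000.0.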

Using the Cardinality Limit Alert Task

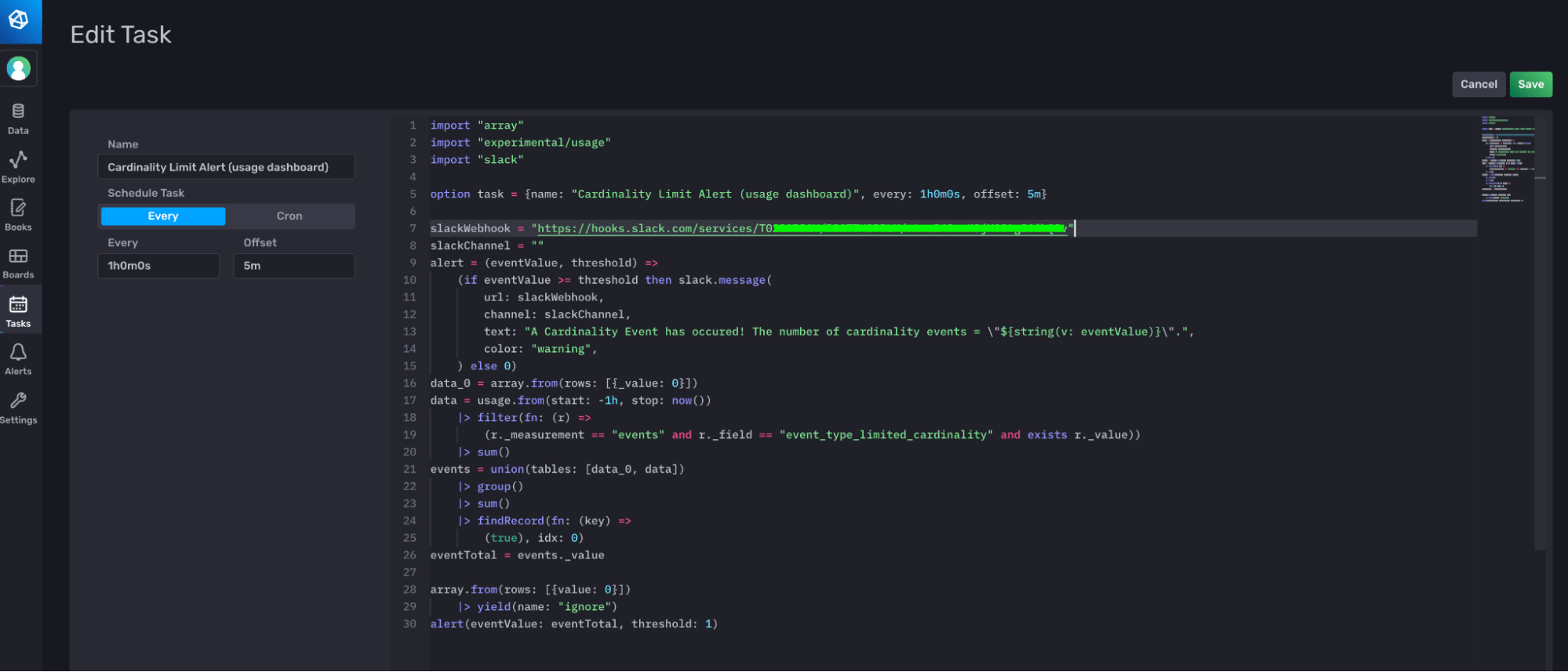

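The Cardinality Limit Alert task is responsible for determining when you've reached or exceeded your cardinality limit and for sending an alert to a Slack endpoint. For this task to work and send alerts to your Slack, you must edit the task and supply your webhook. You can either edit the task directly in the UI or use the CLI and the Flux extension for Visual Studio Code. For this one-line edit, I recommend using the UI.

Navigate to the Tasks page, click the Cardinality Limit Alert task to edit it, edit line 7 to supply your Slack webhook, and then click Save to make the Cardinality Limit Alert task functional.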